Content from Introduction

Last updated on 2025-11-07

Overview

Questions

- What is machine learning?

- What are some useful machine learning techniques?

Objectives

- Gain an overview of what machine learning is and the techniques available.

- Understand how machine learning, deep learning, and artificial intelligence differ.

- Be aware of some caveats when using machine learning.

What is machine learning?

Machine learning is a set of techniques that enable computers to use data to improve their performance in a given task. This is similar in concept to how humans learn to make predictions based upon previous experience and knowledge. Machine learning is “data-driven”, meaning that it uses the underlying statistics of a set of data to achieve a task.

Machine learning encompasses a wide range of tasks and activities, but broadly speaking it can be used to: find trends in a dataset, classify data into groups or categories, make predictions based upon data, and even “learn” how to interact with an environment when provided with goals to achieve.

Artificial intelligence vs machine learning

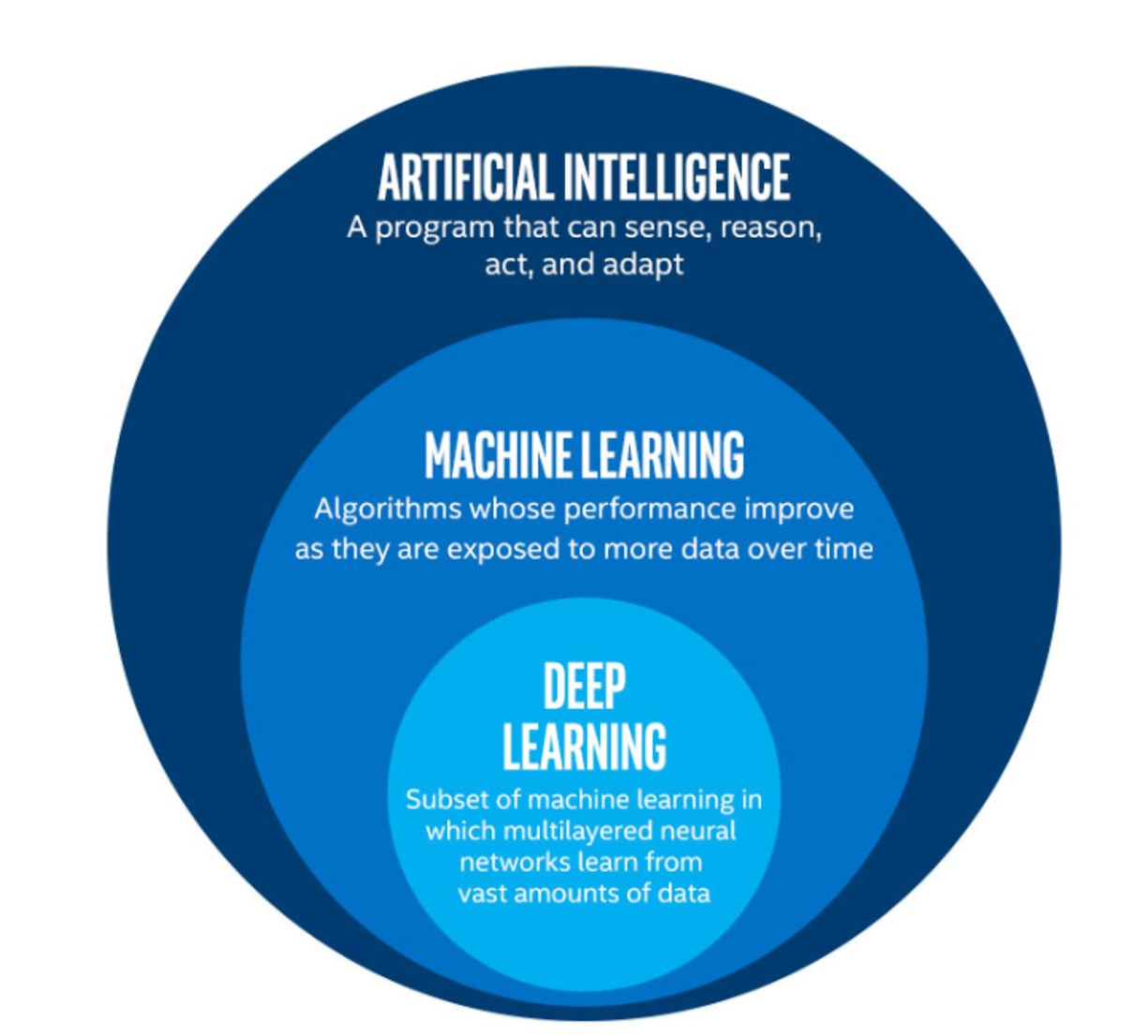

The term machine learning (ML) is often mentioned alongside artificial intelligence (AI) and deep learning (DL). Deep learning is a subset of machine learning, and machine learning is a subset of artificial intelligence.

AI is increasingly being used as a catch-all term to describe things that encompass ML and DL systems - from simple email spam filters, to more complex image recognition systems, to large language models such as ChatGPT. The more specific term “Artificial General Intelligence” (AGI) is used to describe a system possessing a “general intelligence” that can be applied to solve a diverse range of problems, often mimicking the behaviour of intelligent biological systems. Modern attempts at AGI are getting close to fooling humans, but while there have been great advances in AI research, human-like intelligence is only possible in a few specialist areas.

ML refers to techniques where a computer can “learn” patterns in data, usually by being shown many training examples. While ML algorithms can learn to solve specific problems, or multiple similar problems, they are not considered to possess a general intelligence. ML algorithms often need hundreds or thousands of examples to learn a task and are confined to activities such as simple classifications. A human-like system could learn much more quickly than this, and potentially learn from a single example by using its knowledge of many other problems.

DL is a particular field of machine learning where algorithms called neural networks are used to create highly complex systems. Large neural networks, built from many layers of interconnected artificial neurons, are able to learn from vast quantities of data. Deep learning can be used to solve a wide range of problems, but it can also require huge amounts of input data and computational resources to train.

The image above shows the relationships between artificial intelligence, machine learning and deep learning. It is by Tukijaaliwa, CC BY-SA 4.0, via Wikimedia Commons (original source).

Machine learning in our daily lives

Machine learning has quickly become an important technology and is now frequently used to perform services we encounter in our daily lives. Here are just a few examples:

- Banks look for trends in transaction data to detect outliers that may be fraudulent

- Email inboxes use text to decide whether an email is spam or not, and adjust their rules based upon how we flag emails

- Travel apps use live and historic data to estimate traffic, travel times, and journey routes

- Retail companies and streaming services use data to recommend new content we might like based upon our demographic and historical preferences

- Image, object, and pattern recognition is used to identify humans and vehicles, capture text, generate subtitles, and much more

- Self-driving cars and robots use object detection and performance feedback to improve their interaction with the world

Where else have you encountered machine learning already?

Now that we have explored machine learning in a bit more detail, discuss with the person next to you:

1. Where else have I seen machine learning in use?

2. What kind of input data does that machine learning system use to make predictions/classifications?

3. Is there any evidence that your interaction with the system contributes to further training?

4. Do you have any examples of the system failing?

Limitations of machine learning

Like any other system, machine learning has limitations, caveats, and “gotchas” to be aware of that may impact the accuracy and performance of a machine learning system.

Garbage in = garbage out

There is a classic expression in computer science: “garbage in = garbage out”. This means that if the input data we use is garbage, then the output will be too. If, for example, we try to use a machine learning system to find a link between two unlinked variables, then it may well manage to produce a model attempting this, but the output will be meaningless.
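To make the point concrete, here is a minimal sketch (not part of the original lesson) using NumPy and two made-up variables generated independently of each other. A straight line can still be fitted, but its R² (the fraction of the variation in y that the line explains) comes out close to zero, so the resulting "model" tells us nothing.

PYTHON

```python
import numpy as np

# Two hypothetical variables generated independently: there is no real link between them.
rng = np.random.default_rng(seed=42)
x = rng.normal(size=1000)
y = rng.normal(size=1000)

# A least-squares straight line can always be fitted...
slope, intercept = np.polyfit(x, y, deg=1)
y_pred = slope * x + intercept

# ...but it explains almost none of the variation in y (R^2 close to 0).
ss_res = np.sum((y - y_pred) ** 2)
ss_tot = np.sum((y - y.mean()) ** 2)
r_squared = 1 - ss_res / ss_tot
print(f"slope = {slope:.3f}, R^2 = {r_squared:.4f}")
```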

Biases due to training data

The performance of a ML system depends on the breadth and quality of input data used to train it. If the input data contains biases or blind spots then these will be reflected in the ML system. For example, if we collect data on public transport use from only high socioeconomic areas, the resulting input data may be biased due to a range of factors that may increase the likelihood of people from those areas using private transport vs public options.

Extrapolation

We can only make reliable predictions about data which is in the same range as our training data. If we try to extrapolate beyond the boundaries of the training data, we cannot be confident in our results. As we shall see, some algorithms are better suited (or less suited) to extrapolation than others.
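A minimal sketch of the extrapolation problem, assuming hypothetical data that follow y = x² but are only observed for x between 0 and 5. A straight line fitted with NumPy's `np.polyfit` is a rough approximation inside that range, but its error far outside the range is many times larger.

PYTHON

```python
import numpy as np

# Hypothetical training data: y = x**2, observed only for x between 0 and 5.
x_train = np.linspace(0, 5, 50)
y_train = x_train ** 2

# Fit a straight line to the training range.
slope, intercept = np.polyfit(x_train, y_train, deg=1)


def predict(x):
    """Evaluate the fitted straight line."""
    return slope * x + intercept


# Inside the training range the line is a rough but usable approximation...
max_in_range_error = np.max(np.abs(predict(x_train) - y_train))

# ...but far outside it the error grows dramatically.
x_far = 20.0
extrapolation_error = abs(predict(x_far) - x_far ** 2)

print(f"max error within [0, 5]: {max_in_range_error:.1f}")
print(f"error at x = 20: {extrapolation_error:.1f}")
```

Inside [0, 5] the worst error is about 4, while at x = 20 the line predicts roughly 96 instead of 400.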

Getting started with Scikit-Learn

About Scikit-Learn

Scikit-Learn is a Python package designed to give access to well-known machine learning algorithms within Python code, through a clean application programming interface (API). It has been built by hundreds of contributors from around the world, and is used across industry and academia.

Scikit-Learn is built upon Python’s NumPy (Numerical Python) and SciPy (Scientific Python) libraries, which enable efficient in-core numerical and scientific computation within Python. As such, Scikit-Learn is not specifically designed for extremely large datasets, though there is some work in this area. For this introduction to ML we are going to stick to processing small to medium datasets with Scikit-Learn, without the need for a graphics processing unit (GPU).

Like any other Python package, we can import Scikit-Learn and check the package version using the following Python commands:
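A minimal version of these commands (the exact version string printed will depend on your installation):

PYTHON

```python
# Import the Scikit-Learn package (its import name is "sklearn")
import sklearn

# Every installed release exposes its version as a string
print("scikit-learn version:", sklearn.__version__)
```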

Representation of Data in Scikit-learn

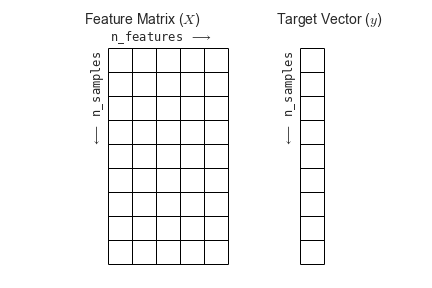

Machine learning is about creating models from data: for that reason, we’ll start by discussing how data can be represented in order to be understood by the computer.

Most machine learning algorithms implemented in scikit-learn expect data to be stored in a two-dimensional array or matrix. The arrays can be either NumPy arrays, or in some cases scipy.sparse matrices. The shape of the array is expected to be [n_samples, n_features].

We typically have a “Features Matrix” (usually referred to as the code variable X), which contains the “features” data we wish to train on.

- n_samples: The number of samples. A sample can be a document, a picture, a sound, a video, an astronomical object, a row in database or CSV file, or whatever you can describe with a fixed set of quantitative traits.

- n_features: The number of features (variables) that can be used to describe each item in a quantitative manner. Features are generally real-valued, but may be boolean or discrete-valued in some cases.

If we want our ML models to make predictions or classifications, we also provide “labels” as our expected “answers/results”. The model will then be trained on the input features to try and match our provided labels. This is done by providing a “Target Array” (usually referred to as the code variable y), which contains the “labels or values” that we wish to predict using the features data.

Figure from the Python Data Science Handbook
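The sketch below builds a features matrix X and a target array y from a small pandas DataFrame so that their shapes can be checked. The table and its values are hypothetical; only the column names mirror the penguin data used later.

PYTHON

```python
import pandas as pd

# Hypothetical table: 4 samples (rows) described by 2 numeric features plus a label.
table = pd.DataFrame({
    "bill_length_mm": [40.0, 45.0, 50.0, 38.0],
    "flipper_length_mm": [190.0, 200.0, 220.0, 185.0],
    "species": ["Adelie", "Chinstrap", "Gentoo", "Adelie"],
})

# Features matrix X: 2D, shape (n_samples, n_features)
X = table[["bill_length_mm", "flipper_length_mm"]].to_numpy()

# Target array y: 1D, shape (n_samples,)
y = table["species"].to_numpy()

print(X.shape)  # (4, 2)
print(y.shape)  # (4,)

# A single feature column must still be 2D for scikit-learn: shape (n_samples, 1)
x_single = table["bill_length_mm"].to_numpy().reshape(-1, 1)
print(x_single.shape)  # (4, 1)
```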

What will we cover today?

This lesson will introduce you to some of the key concepts and sub-domains of ML such as supervised learning, unsupervised learning, and neural networks.

The figure below provides a nice overview of some of the sub-domains of ML and the techniques used within each sub-domain. We recommend checking out the Scikit-Learn webpage for additional examples of the topics we will cover in this lesson. We will cover topics highlighted in blue: classical learning techniques such as regression, classification, clustering, and dimension reduction, as well as ensemble methods and a brief introduction to neural networks using perceptrons.

Image from Vasily Zubarev via their blog with modifications in blue to denote lesson content.


- Machine learning is a set of tools and techniques that use data to make predictions.

- Artificial intelligence is a broader term that refers to making computers show human-like intelligence.

- Deep learning is a subset of machine learning.

- All machine learning systems have limitations to be aware of.

Content from Supervised methods - Regression

Last updated on 2025-11-07

Overview

Questions

- What is supervised learning, and how does regression fit into it?

- How can we build, train, and evaluate a simple regression model in Scikit-Learn?

- Why do we split data into training and testing sets?

- How does data exploration and encoding affect model performance?

Objectives

- Load and inspect real-world data using Pandas and Seaborn.

- Prepare data for modeling by handling missing values and converting categorical variables to numeric (dummy coding).

- Apply linear regression with Scikit-Learn to create a predictive model.

- Split data into training and testing sets to evaluate generalization performance.

- Measure and interpret model accuracy using error metrics (e.g., RMSE).

- Explore how polynomial regression extends linear models to capture non-linear relationships.

- Recognize signs of overfitting and understand why exploratory data analysis (EDA) is critical before modeling.

Supervised learning

Classical machine learning is often divided into two categories – supervised and unsupervised learning.

For the case of supervised learning we act as a “supervisor” or “teacher” for our ML algorithms by providing the algorithm with “labelled data” that contains example answers of what we wish the algorithm to achieve.

For instance, if we wish to train our algorithm to distinguish between images of cats and dogs, we would provide our algorithm with images that have already been labelled as “cat” or “dog” so that it can learn from these examples. If we wished to train our algorithm to predict house prices over time we would provide our algorithm with example data of datetime values that are “labelled” with house prices.

Supervised learning is split up into two further categories: classification and regression. For classification the labelled data is discrete, such as the “cat” or “dog” example, whereas for regression the labelled data is continuous, such as the house price example.

In this episode we will explore how we can use regression to build a “model” that can be used to make predictions.

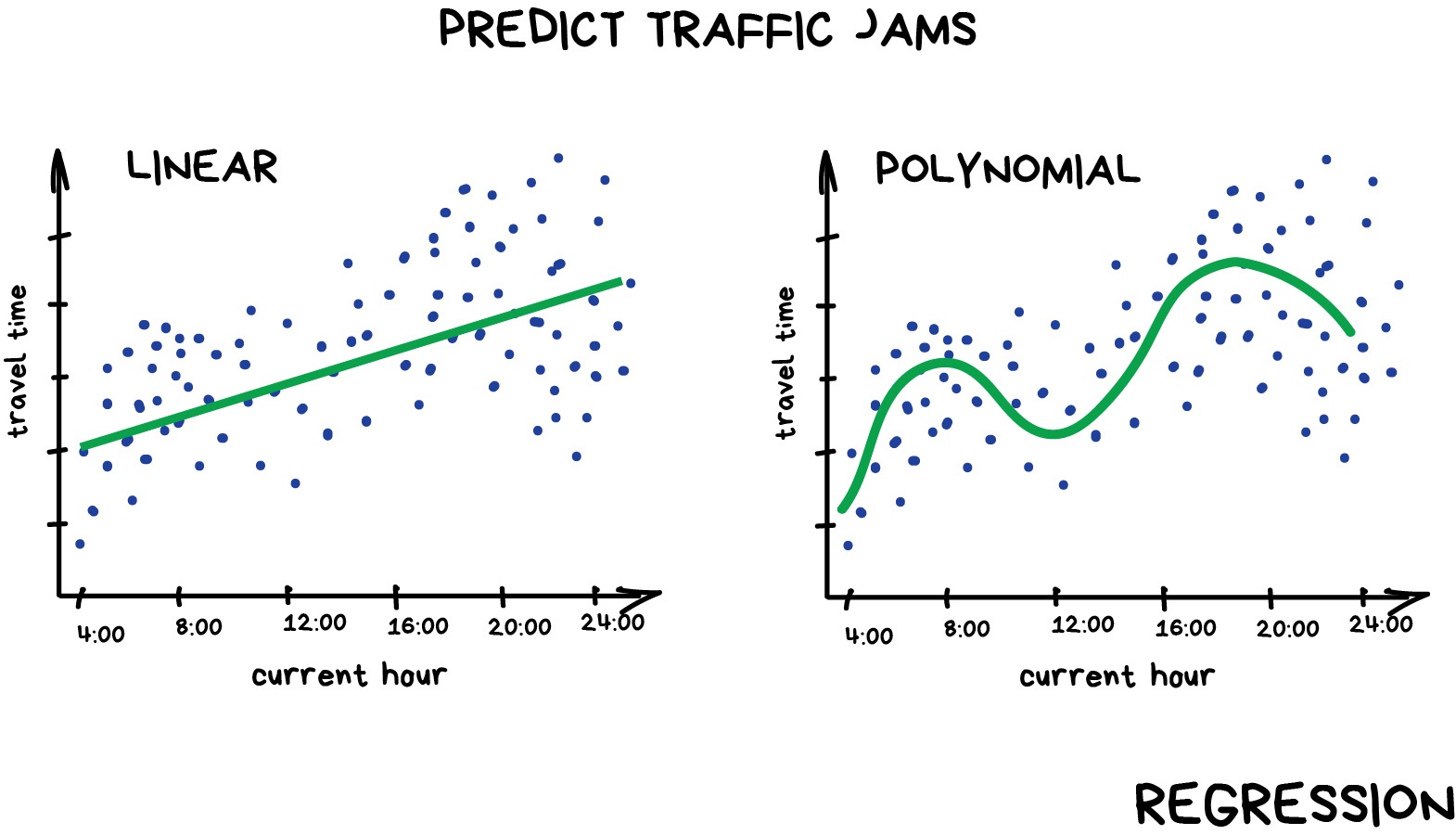

Regression

Regression is a statistical technique that relates a dependent variable (a label or target variable in ML terms) to one or more independent variables (features in ML terms). A regression model attempts to describe this relation by fitting the data as closely as possible according to mathematical criteria. This model can then be used to predict new labelled values by inputting the independent variables into it. For example, if we create a house price model we can then feed in any datetime value we wish, and get a new house price value prediction.

Regression can be as simple as drawing a “line of best fit” through data points, known as linear regression, or more complex models such as polynomial regression, and is used routinely around the world in both industry and research. You may have already used regression in the past without knowing that it is also considered a machine learning technique!

Linear regression using Scikit-Learn

We’ve had a lot of theory, so it’s time to start some actual coding! Let’s create a regression model on some penguin data available through the Python plotting library Seaborn.

Let’s start by loading in and examining the penguin dataset, which contains a few hundred samples and a number of features and labels.

PYTHON

# !pip install seaborn if import fails, run this first

import seaborn as sns

dataset = sns.load_dataset("penguins")

print(dataset.shape)

dataset.head()

We can see that we have seven columns in total: four continuous (numerical) columns named bill_length_mm, bill_depth_mm, flipper_length_mm, and body_mass_g; and three discrete (categorical) columns named species, island, and sex. We can also see from a quick inspection of the first five samples that we have some missing data in the form of NaN values. Missing data is a fairly common occurrence in real-life data, so let’s go ahead and remove any rows that contain NaN values:
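The cleaning step can be sketched as follows. So that the snippet runs on its own, it uses a small hypothetical stand-in table (`df`) rather than the real penguin data: `isna().sum()` counts the missing values per column, and `dropna()` drops every row that contains at least one of them.

PYTHON

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for the penguin table, with some missing values.
df = pd.DataFrame({
    "bill_depth_mm": [18.0, np.nan, 17.0, 19.0],
    "body_mass_g": [3700.0, np.nan, 3300.0, 3500.0],
    "sex": ["Male", None, "Female", np.nan],
})
print("before:", df.shape)

# Count missing values (NaN or None) in each column
print(df.isna().sum())

# Remove every row that contains at least one missing value
df_clean = df.dropna()
print("after:", df_clean.shape)
```

Applied to the lesson data, the equivalent call is `dataset = dataset.dropna()` (or `dataset.dropna(inplace=True)`); the slicing of the first 146 samples below assumes `dataset` has been cleaned in this way.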

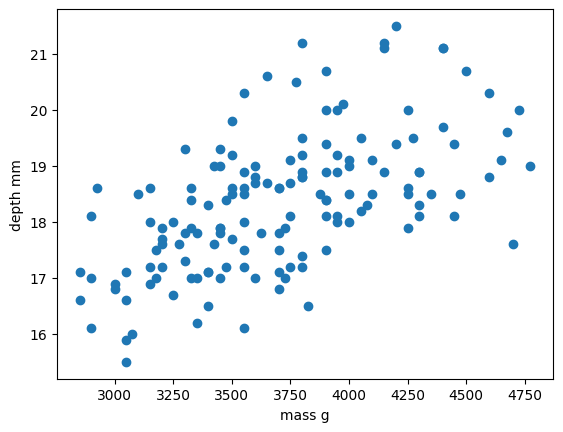

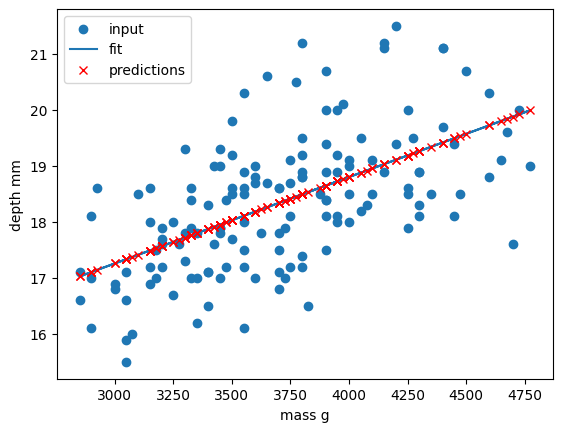

In this scenario we will train a linear regression model using body_mass_g as our feature data and bill_depth_mm as our label data.

In machine learning we often train our models on a subset of data, for reasons we will explain later in this lesson, so let us extract a subset of data to work on by slicing the first 146 samples of our cleaned data and extracting our feature and label data:

PYTHON

import matplotlib.pyplot as plt

train_data = dataset[:146] # first 146 rows

x_train = train_data["body_mass_g"]

y_train = train_data["bill_depth_mm"]

plt.scatter(x_train, y_train)

plt.xlabel("mass g")

plt.ylabel("depth mm")

plt.show()

In this regression example we will create a Linear Regression model that will try to predict y values based upon x values.

In machine learning terminology: we will use our x feature (variable) and y labels (“answers”) to train our Linear Regression model to predict y values when provided with x values.

The mathematical equation for a linear fit is y = mx + c, where y is our label data, x is our input feature(s), m represents the slope of the linear fit, and c represents the intercept with the y-axis.
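For a single feature, the values of m and c that minimise the squared error have a closed form: m = cov(x, y) / var(x) and c = mean(y) - m * mean(x). The sketch below checks this against `LinearRegression` on hypothetical synthetic data scattered around y = 2x + 1. It illustrates ordinary least squares; it is not a description of how Scikit-Learn computes the fit internally.

PYTHON

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical noisy data scattered around the line y = 2x + 1
rng = np.random.default_rng(seed=0)
x = rng.uniform(0, 10, size=100)
y = 2.0 * x + 1.0 + rng.normal(scale=0.5, size=100)

# Closed-form ordinary least squares for one feature:
#   m = cov(x, y) / var(x),   c = mean(y) - m * mean(x)
m = np.sum((x - x.mean()) * (y - y.mean())) / np.sum((x - x.mean()) ** 2)
c = y.mean() - m * x.mean()

# LinearRegression minimises the same squared error, so it should agree.
lin_regress = LinearRegression().fit(x.reshape(-1, 1), y)

print("closed form:      m =", m, " c =", c)
print("LinearRegression: m =", lin_regress.coef_[0], " c =", lin_regress.intercept_)
```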

A typical ML workflow is as follows:

- Decide on a model to use (also known as an estimator)

- Tweak your data into the required format for your model

- Define and train your model on the input data

- Predict some values using the trained model

- Check the accuracy of the prediction, and visualise the result

We have already decided to use a linear regression model, so we’ll now pre-process our data into a format that Scikit-Learn can use.

Let’s reshape our x/y data into the format Scikit-Learn expects and check the resulting shapes.

PYTHON

import numpy as np

# sklearn expects a 2D array of features, so let's reshape our 1D arrays from shape (N,) to (N, 1).

x_train = np.array(x_train).reshape(-1, 1)

y_train = np.array(y_train).reshape(-1, 1)

print(x_train.shape)

print(y_train.shape)

Next we’ll define a model, and train it on the pre-processed data. We’ll also inspect the trained model parameters m and c:

PYTHON

# load the LinearRegression class from sklearn.linear_model

from sklearn.linear_model import LinearRegression

help(LinearRegression)

PYTHON

# define our estimator/model

model = LinearRegression(fit_intercept=True)

# train our estimator/model using our data

lin_regress = model.fit(x_train, y_train)

# inspect the trained estimator/model parameters

m = lin_regress.coef_

c = lin_regress.intercept_

print("linear coefs=", m, c)

Now we can make predictions using our trained model, and calculate the Root Mean Squared Error (RMSE) of our predictions:

PYTHON

import math

from sklearn.metrics import mean_squared_error

# Predict some values using our trained estimator/model.

# In this case we predict our input data to evaluate accuracy!

y_train_pred = lin_regress.predict(x_train)

# display first 10 predicted values for bill depth

y_train_pred[0:10]

PYTHON

# calculate the RMS error as a quality-of-fit metric

train_error = math.sqrt(mean_squared_error(y_train, y_train_pred))

print("train RMSE =", train_error)

Finally, we’ll plot our input data, our linear fit, and our predictions:

PYTHON

plt.scatter(x_train, y_train, label="input")

plt.plot(x_train, y_train_pred, "-", label="fit")

plt.plot(x_train, y_train_pred, "rx", label="predictions")

plt.xlabel("body_mass_g")

plt.ylabel("bill_depth_mm")

plt.legend()

plt.show()

Congratulations! We’ve now created our first machine-learning model of the lesson and we can now make predictions of bill_depth_mm for any body_mass_g values that we pass into our model.

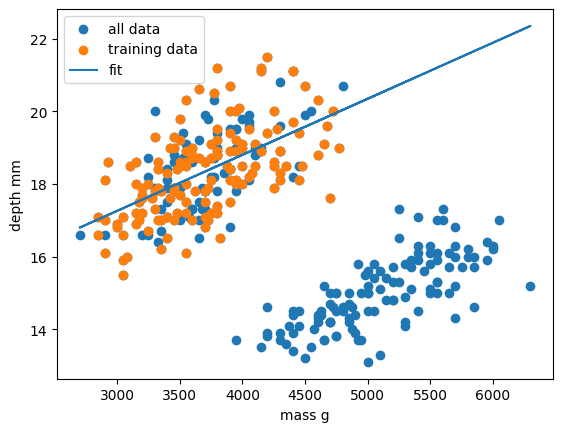
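The RMSE used above is the square root of the mean of the squared differences between observed and predicted values, so it is expressed in the same units as the label (here, millimetres of bill depth). A small sketch with hypothetical numbers confirms that computing it by hand matches `math.sqrt(mean_squared_error(...))`:

PYTHON

```python
import math

import numpy as np
from sklearn.metrics import mean_squared_error

# Hypothetical observed labels and model predictions
y_true = np.array([18.0, 17.5, 19.0, 16.0])
y_pred = np.array([17.0, 18.5, 19.0, 17.0])

# RMSE = sqrt( mean( (y_true - y_pred)^2 ) )
rmse_by_hand = math.sqrt(np.mean((y_true - y_pred) ** 2))
rmse_sklearn = math.sqrt(mean_squared_error(y_true, y_pred))

print(rmse_by_hand, rmse_sklearn)  # both equal sqrt(0.75), about 0.866
```

Recent Scikit-Learn releases (1.4 and later) also provide `sklearn.metrics.root_mean_squared_error`, which computes the same quantity in one call; check your installed version before relying on it.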

Let’s provide the model with the remaining penguin samples, which it did not see during training, and see how our model performs on them:

PYTHON

# Extract remaining observations for testing

test_data = dataset[146:] # row 147 -> end

x_test = test_data["body_mass_g"] # lowercase x since there is only one predictor

y_test = test_data["bill_depth_mm"] # lowercase y since there is only one target variable

# sklearn expects a 2D array of features, so let's reshape our 1D arrays from shape (N,) to (N, 1).

x_test = np.array(x_test).reshape(-1, 1)

y_test = np.array(y_test).reshape(-1, 1)

# Predict values using our trained estimator/model from earlier

y_test_pred = lin_regress.predict(x_test)

# calculate the RMSE for the test data

test_error = math.sqrt(mean_squared_error(y_test, y_test_pred))

print("test RMSE =", test_error)

Our RMSE for predictions on the remaining penguin samples is far larger than before, so let’s visually inspect the situation:

PYTHON

plt.scatter(x_train, y_train, label="train")

plt.scatter(x_test, y_test, label="test")

plt.plot(x_train, y_train_pred, "-", label="fit")

# plt.plot(x_train, y_train_pred, "rx", label="predictions")

plt.xlabel("body_mass_g")

plt.ylabel("bill_depth_mm")

plt.legend()

plt.show()

Oh dear. It looks like our linear regression fits okay for our subset of the penguin data, and a few additional samples, but there appears to be a cluster of points that are poorly predicted by our model. Even if we re-trained our model using all samples it looks unlikely that our model would perform much better due to the two-cluster nature of our dataset.

This is a classic machine learning scenario known as overfitting.

We have trained our model on a specific set of data, and our model has learnt to reproduce those specific answers at the expense of creating a more generally-applicable model. Overfitting is the ML equivalent of learning an exam paper’s mark scheme off by heart, rather than understanding and answering the questions. Overfitting is especially prevalent when you have (A) limited data, and/or (B) complicated/large models with lots of trainable parameters (e.g., neural nets).
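A minimal sketch of overfitting on hypothetical data (a noisy sine curve with only 20 training points), using polynomial models of increasing degree built with `make_pipeline`, `PolynomialFeatures` and `LinearRegression`. Because each higher-degree model contains the lower-degree ones as special cases, the training RMSE can only stay the same or fall as the degree grows; the test RMSE, computed on points the model never saw, is what shows whether the extra flexibility actually helped or merely memorised the noise.

PYTHON

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Hypothetical data: a noisy sine curve with a small training set
rng = np.random.default_rng(seed=1)
x_train = np.sort(rng.uniform(0, 1, size=20)).reshape(-1, 1)
y_train = np.sin(2 * np.pi * x_train).ravel() + rng.normal(scale=0.2, size=20)

# Noise-free test points across the same range
x_test = np.linspace(0, 1, 200).reshape(-1, 1)
y_test = np.sin(2 * np.pi * x_test).ravel()

train_rmse = {}
test_rmse = {}
for degree in [1, 3, 9]:
    # Expand x into polynomial terms, then fit a linear model to them
    model = make_pipeline(PolynomialFeatures(degree=degree), LinearRegression())
    model.fit(x_train, y_train)
    train_rmse[degree] = np.sqrt(mean_squared_error(y_train, model.predict(x_train)))
    test_rmse[degree] = np.sqrt(mean_squared_error(y_test, model.predict(x_test)))
    print(f"degree {degree}: train RMSE = {train_rmse[degree]:.3f}, "
          f"test RMSE = {test_rmse[degree]:.3f}")
```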

In this episode we chose to create a regression model for bill_depth_mm versus body_mass_g predictions without understanding our penguin dataset. While we proved we can make a model by doing this, we also saw that the model is flawed due to complexity in the data that we did not account for.

There are at least two interpretations of these results:

- Bill depth really does simply decrease with increasing body mass, and we just missed the larger story by zooming in on a subset of the data

- Bill depth generally increases with body mass, but with another covariate producing somewhat unique distributions/clusters across this axis.

Let’s assume for a moment that we only have access to the two variables, body mass and bill depth. In this scenario, we may want a model that captures the global trend of bill depth decreasing with body mass. For this, we need to revisit how we split our data into train/test sets. Sklearn provides a tool to make it easy to split into these subsets using random shuffling of observations.

PYTHON

from sklearn.model_selection import train_test_split

x = dataset['body_mass_g']

y = dataset['bill_depth_mm']

# sklearn expects a 2D array of features, so let's reshape our 1D arrays from shape (N,) to (N, 1).

x = np.array(x).reshape(-1, 1)

y = np.array(y).reshape(-1, 1)

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=0)

Exercise: Try to re-implement our univariate regression model using these new train/test sets.

Follow these steps:

- Define your estimator model

- Train the model using .fit()

- Get predictions from the model using .predict()

- Calculate RMSE for train/test

- Plot scatter plot of train/test data, with line of best fit

PYTHON

from sklearn.linear_model import LinearRegression

# Define our estimator/model

model = LinearRegression(fit_intercept=True)

# train our estimator/model using our data

lin_regress = model.fit(x_train, y_train)

# get predictions and calculate the RMS error for train data

y_train_pred = lin_regress.predict(x_train)

train_error = math.sqrt(mean_squared_error(y_train, y_train_pred))

print("train RMSE =", train_error)

# get predictions and calculate the RMS error for test data

y_test_pred = lin_regress.predict(x_test)

test_error = math.sqrt(mean_squared_error(y_test, y_test_pred))

print("test RMSE =", test_error)

# scatter plot

plt.scatter(x_train, y_train, label="train")

plt.scatter(x_test, y_test, label="test")

plt.plot(x_train, y_train_pred, "-", label="fit")

# plt.plot(x_train, y_train_pred, "rx", label="predictions")

plt.xlabel("body_mass_g")

plt.ylabel("bill_depth_mm")

plt.legend()

plt.show()

Quick follow-up: Interpret the results of your model. Is it accurate? What does it say about the relationship between body mass and bill depth? Is this a “good” model?

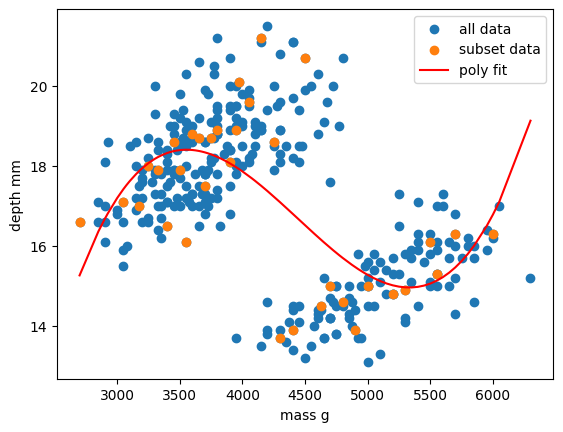

Repeating the regression with different estimators

The goal of this lesson isn’t to build a generalisable bill_depth_mm versus body_mass_g model for the penguin dataset; the goal is to give you some hands-on experience building machine learning models with scikit-learn. So let’s repeat the above, but this time using a polynomial function.

Polynomial functions are non-linear functions that are commonly used to model data. A polynomial of degree N takes the form y = a + bx + cx^2 + dx^3 + ... + mx^N, with N + 1 coefficients to be fitted. If we have a polynomial of degree N=1 we once again return to a linear equation y = a + bx or, as it is more commonly written, y = mx + c.

We’ll follow the same workflow from before:

- Decide on a model to use (also known as an estimator)

- Tweak your data into the required format for your model

- Define and train your model on the input data

- Predict some values using the trained model

- Check the accuracy of the prediction, and visualise the result

We’ve decided to use a polynomial estimator, so now let’s tweak our dataset into the required format. For polynomial estimators in Scikit-Learn this is done in two steps. First we pre-process our input data x_train into a polynomial representation using the PolynomialFeatures transformer. Then we can create our polynomial regression using LinearRegression().fit() as before, but this time using the polynomial representation of our x_train.

PYTHON

from sklearn.preprocessing import PolynomialFeatures

# create a polynomial representation of our training data

poly_features = PolynomialFeatures(degree=2)

x_train_poly = poly_features.fit_transform(x_train)

x_test_poly = poly_features.transform(x_test)

We convert a non-linear problem into a linear one

By converting our input feature data into a polynomial representation, we can now solve our non-linear problem using linear techniques. This is a common occurrence in machine learning, as linear problems are far easier to solve computationally. We can treat this as just another pre-processing step to manipulate our features into an ML-ready format.

We are now ready to create and train our model using our polynomial feature data.

PYTHON

# Define our estimator/model(s) and train our model

poly_regress = LinearRegression()

poly_regress.fit(x_train_poly, y_train)

We can now make predictions on train/test sets, and calculate RMSE:

PYTHON

# Predictions

y_train_pred = poly_regress.predict(x_train_poly)

y_test_pred = poly_regress.predict(x_test_poly)

poly_train_error = math.sqrt(mean_squared_error(y_train, y_train_pred))

print("poly train error =", poly_train_error)

poly_test_error = math.sqrt(mean_squared_error(y_test, y_test_pred))

print("poly test error =", poly_test_error)

Finally, let’s visualise our model fit on our training and testing data.

PYTHON

# Scatter plots for train and test data

plt.scatter(x_train, y_train, label='Train', color='blue', alpha=0.6)

plt.scatter(x_test, y_test, label='Test', color='red', alpha=0.6)

# Plot the model fit

x_range = np.linspace(x.min(), x.max(), 500).reshape(-1, 1)

y_range_pred = poly_regress.predict(poly_features.transform(x_range))

plt.plot(x_range, y_range_pred, label='Polynomial Model Fit', color='green', linewidth=2)

# Labels and legend

plt.xlabel("mass g")

plt.ylabel("depth mm")

plt.title('Polynomial Regression with Training and Testing Data')

plt.legend()

plt.show()

Exercise: Vary your polynomial degree to try and improve fitting

Adjust the degree argument of the PolynomialFeatures class (set to degree=2 in the code above) to change the degree of polynomial fit. Can you improve the RMSE of your model?

Zooming back out: the importance of EDA

While polynomial regression may help to a degree here, it isn’t an ideal solution. Whenever you see multiple distinct clusters in your data, you should ask yourself what hidden variable might be causing additional clusters to appear, and move on to exploring multivariable models (models with more than one input feature). When we investigate additional features from our data, we are able to see the larger picture that describes how input variables relate to whatever target variable we are interested in.

When you are doing any kind of modeling work, it is critical to spend your first few hours/days/weeks simply exploring the data. This means:

- Investigate pairwise relationships between “predictors” (X)

- Investigate correlation between predictors

- Plot distributions of each variable

- Check for outliers

- Check for NaNs
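Several of these checks can be scripted with pandas. The helper below, `quick_eda`, is a hypothetical example rather than a library function: it reports missing values, summary statistics, pairwise correlations, and a count of possible outliers using the common 1.5 × IQR rule (only one of several conventions). It is demonstrated on a small made-up DataFrame.

PYTHON

```python
import numpy as np
import pandas as pd


def quick_eda(df):
    """Print simple EDA checks for a DataFrame (hypothetical helper)."""
    numeric = df.select_dtypes(include="number")
    nan_counts = df.isna().sum()

    # Flag values outside [Q1 - 1.5*IQR, Q3 + 1.5*IQR] as possible outliers
    q1 = numeric.quantile(0.25)
    q3 = numeric.quantile(0.75)
    iqr = q3 - q1
    outside = (numeric < q1 - 1.5 * iqr) | (numeric > q3 + 1.5 * iqr)
    outlier_counts = outside.sum()

    print("Missing values per column:\n", nan_counts, sep="")
    print("\nSummary statistics:\n", numeric.describe(), sep="")
    print("\nPairwise correlations:\n", numeric.corr(), sep="")
    print("\nPossible outliers (1.5*IQR rule):\n", outlier_counts, sep="")
    return nan_counts, outlier_counts


# Hypothetical data: column "a" has one extreme value, column "b" has one NaN
example = pd.DataFrame({
    "a": [1, 2, 3, 4, 100],
    "b": [2.0, 4.0, np.nan, 8.0, 10.0],
})
nan_counts, outlier_counts = quick_eda(example)
```

With the lesson data you could call `quick_eda(dataset)` before any modeling.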

PYTHON

# Create the pairs plot

sns.pairplot(dataset, vars=["body_mass_g", "bill_depth_mm"], hue="species", diag_kind="kde", markers=["o", "s", "D"])

plt.show()

Let’s try a model that includes penguin species as a predictor.

PYTHON

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_squared_error

# Load the penguins dataset

# (replace this with your own dataset import if needed)

from seaborn import load_dataset

dataset = load_dataset('penguins')

# Drop rows with missing values in relevant columns

dataset = dataset.dropna(subset=['body_mass_g', 'bill_depth_mm', 'species'])

# Define predictors and target

X = dataset[['body_mass_g', 'species']] # conventionally, we use capital X when there are multiple predictors

y = dataset['bill_depth_mm']

Since the species column is coded as a string, we need to convert it into a numerical format before we can use it in a machine learning model. To do this, we apply dummy coding (also called one-hot encoding), which creates new binary columns for each species category (e.g., species_Adelie, species_Chinstrap, species_Gentoo). Each row gets a 1 in the column that matches its species and 0 in the others.

Here we drop the first category (drop_first=True) to avoid multicollinearity; this means the omitted category serves as the reference group when interpreting model coefficients.
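Before applying `pd.get_dummies` to the penguins, it can help to see its output on a hypothetical three-row table with one penguin of each species. With `drop_first=True`, the first category in sorted order (Adelie) gets no column of its own, so an Adelie row is encoded as all zeros; passing the optional `dtype=int` argument makes the indicator columns print as 0/1 rather than True/False.

PYTHON

```python
import pandas as pd

# Hypothetical table with one penguin of each species
toy = pd.DataFrame({
    "body_mass_g": [3700.0, 3800.0, 5000.0],
    "species": ["Adelie", "Chinstrap", "Gentoo"],
})

# One-hot encode species, dropping the first (reference) category
encoded = pd.get_dummies(toy, columns=["species"], drop_first=True, dtype=int)
print(encoded)
#    body_mass_g  species_Chinstrap  species_Gentoo
# 0       3700.0                  0               0
# 1       3800.0                  1               0
# 2       5000.0                  0               1
```

The Adelie row (all zeros) is the reference group, so each species coefficient in the fitted model is read as a difference relative to Adelie penguins of the same body mass.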

PYTHON

# One-hot encode species (drop_first avoids multicollinearity)

X_dummies = pd.get_dummies(X, columns=['species'], drop_first=True)

X_dummies

We can then train/fit and evaluate our model as usual.

PYTHON

# Train/test split

X_train, X_test, y_train, y_test = train_test_split(X_dummies, y, test_size=0.2, random_state=0)

# Fit a linear regression model

model = LinearRegression()

model.fit(X_train, y_train)

# Predict and evaluate

y_pred = model.predict(X_test)

rmse = np.sqrt(mean_squared_error(y_test, y_pred))

print(f"RMSE with species as a predictor: {rmse:.2f}")

# Optional: view learned coefficients

coefficients = pd.Series(model.coef_, index=X_dummies.columns)

print("\nModel coefficients:")

print(coefficients)

- A supervised learning pipeline includes data loading, cleaning, feature selection, training, and testing.

- Scikit-Learn provides simple, consistent tools for regression, model fitting, and performance evaluation.

- Always split data into train and test sets to detect overfitting and to assess model generalization.

- Dummy coding (one-hot encoding) converts categorical variables into a numeric form usable by ML models.

- Polynomial regression can capture non-linear trends by expanding features into polynomial terms.

- Early exploratory data analysis (EDA) helps reveal relationships, clusters, and potential predictors before modeling.

- Overfitting occurs when a model learns noise instead of signal; simpler models and good splits help mitigate this.

Content from Supervised methods - Classification

Last updated on 2025-11-07

Overview

Questions

- What is classification, and how does it differ from regression?

- How can we use Scikit-Learn to train and evaluate a classification model?

- Why and how do we split data into training and testing sets for classification?

- What are hyperparameters, and how do they affect model performance?

- When should I standardize my data, and when is it safe to skip this step?

Objectives

- Define supervised classification and explain how it differs from (supervised) regression.

- Load and inspect the penguins dataset, selecting appropriate numeric features and labels.

- Create train/test splits for classification and explain why stratification and shuffling matter.

- Train and evaluate a decision tree classifier using Scikit-Learn.

- Describe what a hyperparameter is and explore how changing max_depth affects a decision tree.

- Visualize decision boundaries for a simple classifier to build intuition about how it separates classes.

- Standardize features and train an SVM classifier, comparing its performance to the decision tree.

- Explain which types of models benefit from feature scaling (standardization) and which generally do not.

- Recognize signs of overfitting in classification models and relate them to model complexity and data quality.

Classification

Classification is a supervised method to recognise and group data objects into predetermined categories. Where regression uses labelled observations to predict a continuous numerical value, classification predicts a discrete category (class). Classification in ML leverages a wide range of algorithms to classify a set of data/datasets into their respective categories.

In this episode we are going to introduce the concept of supervised classification by classifying penguin data into different species of penguins using Scikit-Learn.

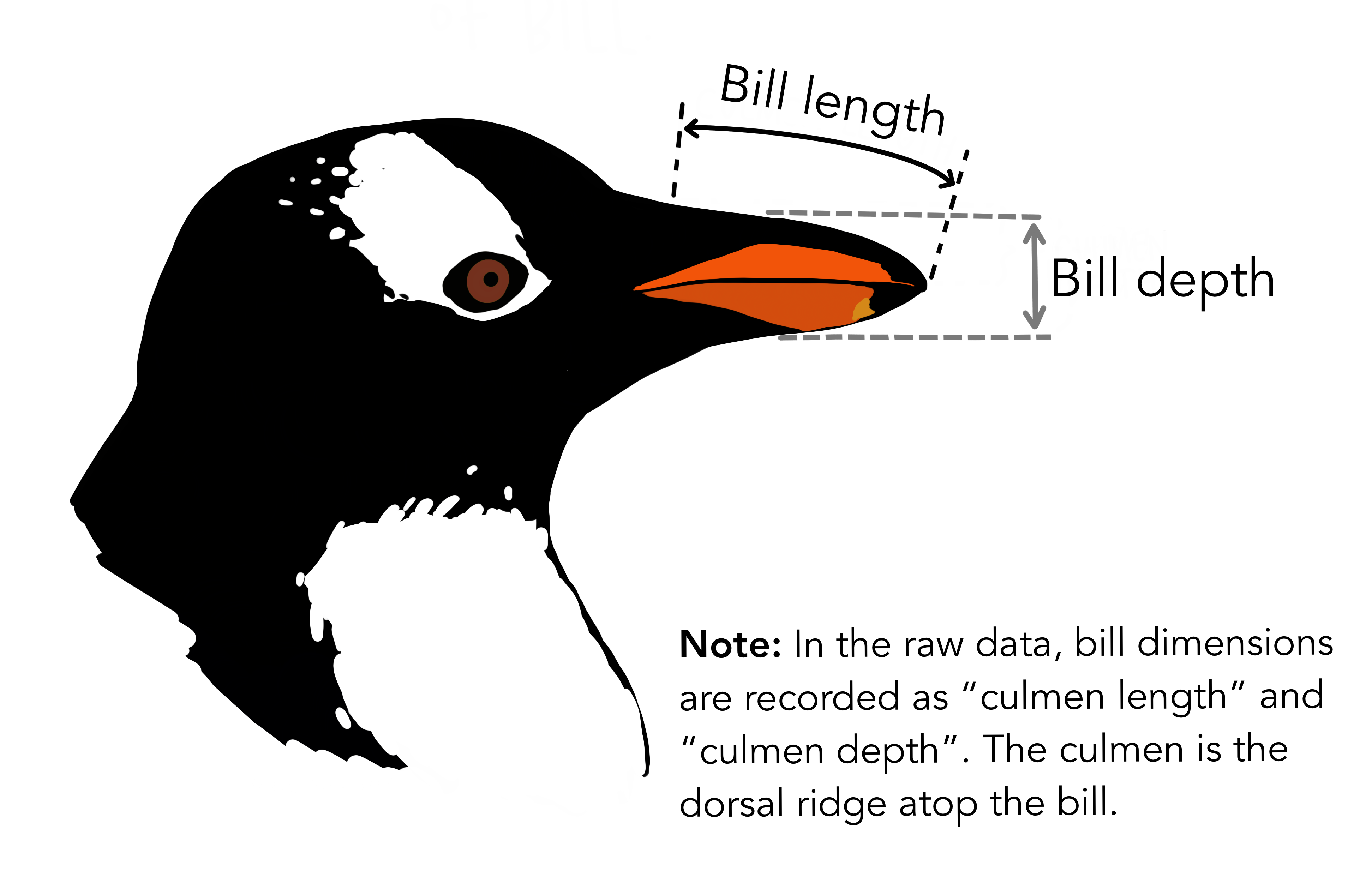

The penguins dataset

We’re going to be using the penguins dataset of Allison Horst, published here. The dataset contains 344 size measurements for three penguin species (Chinstrap, Gentoo and Adélie) observed on three islands in the Palmer Archipelago, Antarctica.

The physical attributes measured are flipper length, beak length,

beak width, body mass, and sex.

In other words, the dataset contains 344 rows with 7 features i.e. 5 physical attributes, species and the island where the observations were made.

Our aim is to develop a classification model that will predict the species of a penguin based upon measurements of those variables.

As a rule of thumb for ML/DL modelling, it is best to start with a simple model and progressively add complexity in order to meet our desired classification performance.

For this lesson we will limit our dataset to only numerical values such as bill_length, bill_depth, flipper_length, and body_mass while we attempt to classify species.

The dataset also contains categorical fields such as species, which we use as our label. If we attempted to include the other categorical fields, island and sex, we might hinder classification performance due to the added complexity of the data.

Preprocessing our data

Let’s do some pre-processing on our dataset and specify our X features and y labels:

PYTHON

# Load the penguins dataset (if not loaded already)

import seaborn as sns

dataset = sns.load_dataset('penguins')

# Extract the data we need, dropping rows with missing measurements

feature_names = ['bill_length_mm', 'bill_depth_mm', 'flipper_length_mm', 'body_mass_g']

dataset.dropna(subset=feature_names, inplace=True)

class_names = dataset['species'].unique()

X = dataset[feature_names]

y = dataset['species']

Having extracted our features X and labels y, we can now split the data using the train_test_split function.

Training-testing split

When undertaking any machine learning project, it’s important to be able to evaluate how well your model works.

Rather than evaluating this manually, we can instead set aside some of our data, usually 20%, as a testing dataset. We then train on the remaining 80% and use the testing dataset to evaluate the accuracy of our trained model.

We lose a bit of training data in the process, but we can now easily evaluate the performance of our model. With more advanced train-test split techniques, such as cross-validation, we can even recover this lost training data!

Why do we do this?

It’s important to do this early, and to do all of your work with the training dataset - this avoids any risk of you introducing bias to the model based on your own manual observations of data in the testing set (after all, we want the model to make the decisions about parameters!). This can also highlight when you are over-fitting on your training data.

How we split the data into training and testing sets is also extremely important. We need to make sure that our training data is representative of both our test data and the data the model will encounter in practice.

For classification problems this means we should ensure that each class of interest is represented proportionately in both training and testing sets. For regression problems we should ensure that our training and test sets cover the range of feature values that we wish to predict.

In the previous regression episode we created the penguin training data by taking the first 146 samples of the dataset. Unfortunately the penguin data is sorted by species, so our training data only contained one type of penguin and was not representative of the actual data we tried to fit. We could have avoided this issue by randomly shuffling our penguin samples before splitting the data.
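To see why stratification and shuffling matter, here is a minimal sketch that uses hypothetical class labels rather than the penguins data (the counts 150/70/120 are made up, loosely mimicking an unbalanced three-class dataset sorted by class). It compares the class proportions of a stratified train_test_split with those of the full dataset, and shows what the first 146 rows of sorted data contain.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical labels, sorted by class like the penguins data
y = pd.Series(["A"] * 150 + ["B"] * 70 + ["C"] * 120)
X = pd.DataFrame({"feature": np.arange(len(y))})

# stratify=y keeps each class's share the same in both splits
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

overall = y.value_counts(normalize=True).sort_index()
train_props = y_train.value_counts(normalize=True).sort_index()
test_props = y_test.value_counts(normalize=True).sort_index()
print(pd.DataFrame({"overall": overall, "train": train_props, "test": test_props}).round(3))

# Taking the first 146 rows of sorted data instead gives a single class
print("classes in first 146 rows:", y.iloc[:146].unique())
```

With stratify=y the train and test proportions line up with the overall proportions, whereas the unshuffled "first rows" split sees only one class.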

When not to shuffle your data

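Sometimes your data depends on its ordering, such as time-series data where past values influence future predictions. Randomly shuffling such data would let the model train on observations from the "future", so creating train-test splits for this can be tricky at first glance. Fortunately there are existing techniques to tackle this, such as Scikit-Learn’s TimeSeriesSplit, which always trains on earlier observations and tests on later ones: see the Scikit-Learn documentation for more information.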
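As an illustration, the sketch below applies Scikit-Learn’s TimeSeriesSplit to twelve hypothetical, time-ordered observations. Each fold trains only on earlier rows and tests on the rows that immediately follow them; the number of splits is an arbitrary choice for the example.

```python
import numpy as np
from sklearn.model_selection import TimeSeriesSplit

# Twelve hypothetical observations, already in time order
X = np.arange(12).reshape(-1, 1)

tscv = TimeSeriesSplit(n_splits=3)
folds = list(tscv.split(X))

for fold, (train_idx, test_idx) in enumerate(folds):
    # Every training index comes before every test index: no peeking into the future
    print(f"fold {fold}: train={train_idx.tolist()} test={test_idx.tolist()}")
```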

We specify the fraction of data to use as test data, and the function randomly shuffles our data prior to splitting:

PYTHON

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

We’ll use X_train and y_train to develop our model, and only look at X_test and y_test when it’s time to evaluate its performance.

Visualising the data

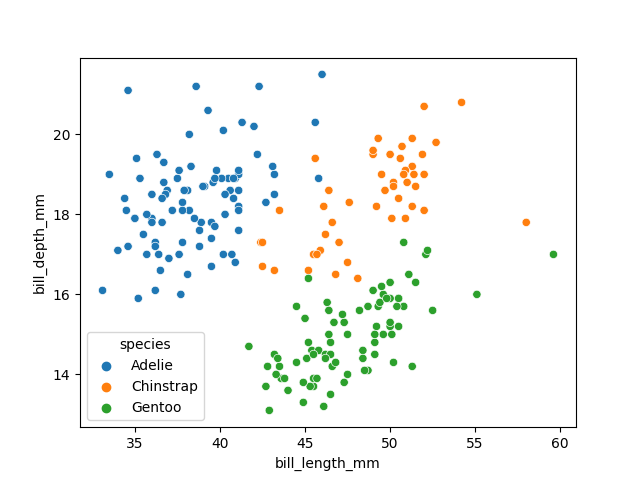

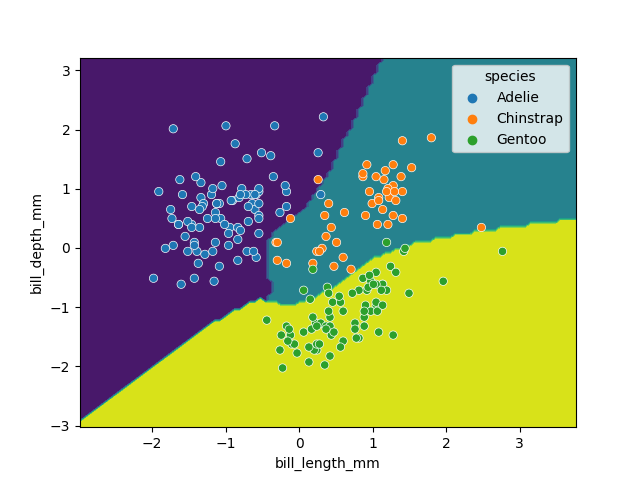

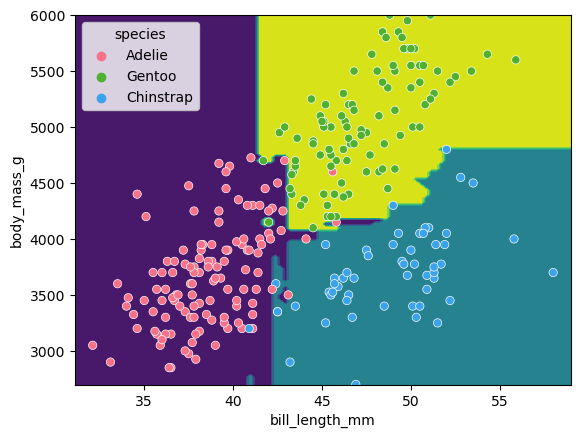

In order to better understand how a model might classify this data, we can first take a look at the data visually, to see what patterns we might identify.

PYTHON

import matplotlib.pyplot as plt

import seaborn as sns

# Colour each training point by its species label

fig01 = sns.scatterplot(X_train, x=feature_names[0], y=feature_names[1], hue=y_train)

plt.show()

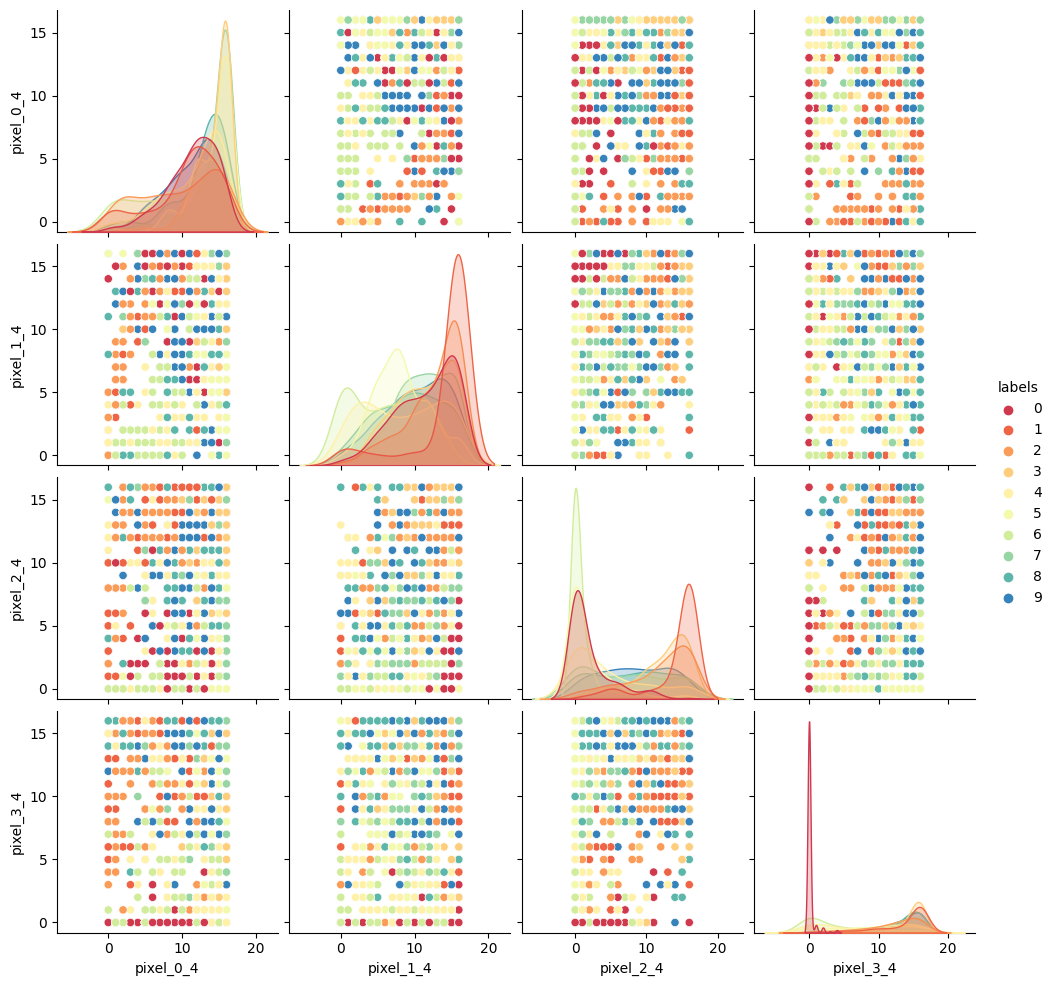

As there are four measurements for each penguin, we need quite a few plots to visualise all four dimensions against each other. Seaborn’s pairplot function draws every pairwise scatter plot in a single call, for example sns.pairplot(dataset, hue='species').

We can see that penguins from each species form fairly distinct spatial clusters in these plots, so that you could draw lines between those clusters to delineate each species. This is effectively what many classification algorithms do: they use the training data to partition the observation space (in this case the 4 measurement dimensions) into classes. When given a new observation, the model finds which of those class regions the new observation falls into.

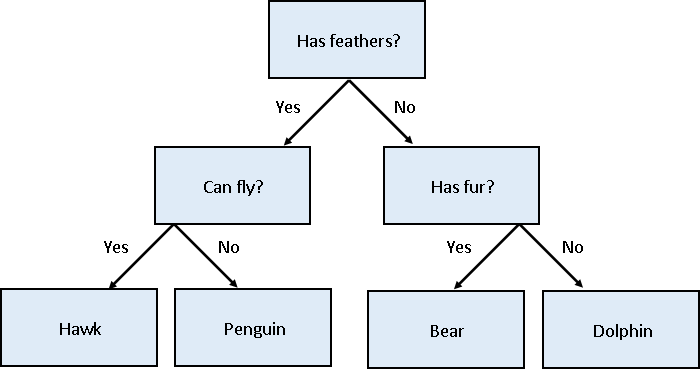

Classification using a decision tree

We’ll first apply a decision tree classifier to the data. Decision trees are conceptually similar to flow diagrams (or more precisely for the biologists: dichotomous keys). They split the classification problem into a binary tree of comparisons, at each step comparing a measurement to a value, and moving left or right down the tree until a classification is reached.

Training and using a decision tree in Scikit-Learn is straightforward:

PYTHON

from sklearn.tree import DecisionTreeClassifier, plot_tree

clf = DecisionTreeClassifier(max_depth=2)

clf.fit(X_train, y_train)

clf.predict(X_test)

Hyper-parameters: parameters that tune a model

‘Max Depth’ is an example of a hyper-parameter for the decision tree model. Where models use the parameters of an observation to predict a result, hyper-parameters are used to tune how a model works. Each model you encounter will have its own set of hyper-parameters, each of which affects model behaviour and performance in a different way. The process of adjusting hyper-parameters in order to improve model performance is called hyper-parameter tuning.
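The distinction between hyper-parameters and learned parameters can be seen directly on a fitted model. This sketch uses the iris dataset that ships with Scikit-Learn as an offline stand-in for the penguins (both have 3 classes and 4 numeric features): max_depth is something we set before training, whereas the split thresholds stored in the fitted tree_ attribute are learned by fit().

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

# Iris stands in for the penguins so the example runs offline
X, y = load_iris(return_X_y=True)

clf = DecisionTreeClassifier(max_depth=2, random_state=0)

# Hyper-parameter: chosen by us before training
print("max_depth setting:", clf.get_params()["max_depth"])

# Learned parameters: which features to split on, and where, come from fit()
clf.fit(X, y)
internal_nodes = clf.tree_.feature >= 0  # leaves are marked with a negative feature index
print("depth reached:", clf.get_depth())
print("learned split thresholds:", clf.tree_.threshold[internal_nodes])
```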

We can conveniently check how our model did with the .score() method (for example, clf_score = clf.score(X_test, y_test)), which makes predictions for X_test and reports what proportion of them match y_test.
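To make explicit what .score() computes for a classifier, this sketch (again on the bundled iris data as a stand-in) compares it with the fraction of correct predictions calculated by hand and with sklearn.metrics.accuracy_score; all three should agree.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

clf = DecisionTreeClassifier(max_depth=2, random_state=0).fit(X_train, y_train)
predictions = clf.predict(X_test)

score = clf.score(X_test, y_test)       # built-in scoring for classifiers
manual = np.mean(predictions == y_test)  # fraction of correct predictions
metric = accuracy_score(y_test, predictions)
print(score, manual, metric)
```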

Our model reports an accuracy of ~98% on the test data! We can also look at the decision tree that was generated:

PYTHON

fig = plt.figure(figsize=(12, 10))

plot_tree(clf, class_names=class_names, feature_names=feature_names, filled=True, ax=fig.gca())

plt.show()

The first question (depth=1) splits the training data into “Adelie” and “Gentoo” categories using the criterion flipper_length_mm <= 206.5, and the next two questions (depth=2) split the “Adelie” and “Gentoo” categories into “Adelie & Chinstrap” and “Gentoo & Chinstrap” predictions.
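Besides plot_tree, Scikit-Learn’s export_text function prints a fitted tree as nested if/else rules, which makes the sequence of questions easy to read. A minimal sketch on the bundled iris data (a stand-in, so the feature names and thresholds differ from the penguin tree):

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()
clf = DecisionTreeClassifier(max_depth=2, random_state=0).fit(iris.data, iris.target)

# Each indented "|---" line is one question; deeper indentation means deeper in the tree
rules = export_text(clf, feature_names=list(iris.feature_names))
print(rules)
```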

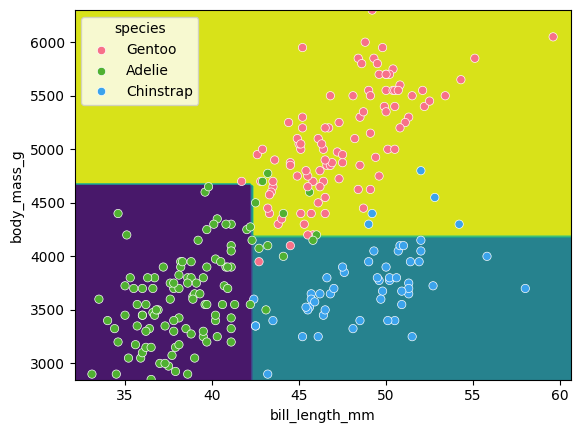

Visualising the classification space

We can visualise the classification space (decision tree boundaries) to get a more intuitive feel for what it is doing. Note that our 2D plot can only show two features at a time, so we will quickly visualise the boundaries by training a new model on only 2 features (bill length and body mass):

PYTHON

from sklearn.inspection import DecisionBoundaryDisplay

f1 = feature_names[0]

f2 = feature_names[3]

clf = DecisionTreeClassifier(max_depth=2)

clf.fit(X_train[[f1, f2]], y_train)

d = DecisionBoundaryDisplay.from_estimator(clf, X_train[[f1, f2]])

sns.scatterplot(X_train, x=f1, y=f2, hue=y_train, palette="husl")

plt.show()

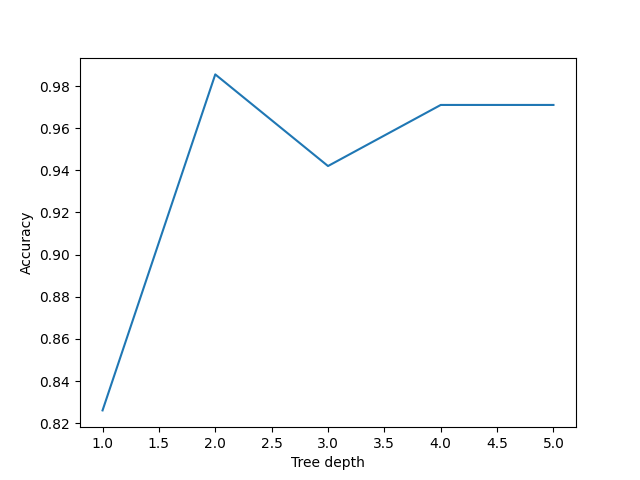

Tuning the max_depth hyperparameter

Our decision tree using max_depth=2 is fairly simple and there are still some incorrect predictions in our final classifications. Let’s try varying the max_depth hyperparameter to see if we can improve our model predictions.

PYTHON

import pandas as pd

max_depths = [1, 2, 3, 4, 5]

accuracy = []

for d in max_depths:

    clf = DecisionTreeClassifier(max_depth=d)

    clf.fit(X_train, y_train)

    acc = clf.score(X_test, y_test)

    accuracy.append((d, acc))

acc_df = pd.DataFrame(accuracy, columns=['depth', 'accuracy'])

sns.lineplot(acc_df, x='depth', y='accuracy')

plt.xlabel('Tree depth')

plt.ylabel('Accuracy')

plt.show()

Here we can see that max_depth=2 performs slightly better on the test data than max_depth > 2. This can seem counter-intuitive, as surely more questions should be able to better split up our categories and thus give better predictions?

Let’s reuse our fitting and plotting code from above to inspect a decision tree that has max_depth=5:

PYTHON

clf = DecisionTreeClassifier(max_depth=5)

clf.fit(X_train, y_train)

fig = plt.figure(figsize=(12, 10))

plot_tree(clf, class_names=class_names, feature_names=feature_names, filled=True, ax=fig.gca())

plt.show()

It looks like our decision tree has split up the training data into the correct penguin categories more accurately than the max_depth=2 model did; however, it used some very specific questions to do so. Let’s try visualising the classification space for a more intuitive understanding:

PYTHON

f1 = feature_names[0]

f2 = feature_names[3]

clf = DecisionTreeClassifier(max_depth=5)

clf.fit(X_train[[f1, f2]], y_train)

d = DecisionBoundaryDisplay.from_estimator(clf, X_train[[f1, f2]])

sns.scatterplot(X_train, x=f1, y=f2, hue=y_train, palette='husl')

plt.show()

Earlier we saw that the max_depth=2 model split the data into 3 simple bounding boxes, whereas for max_depth=5 we see the model has created some very specific classification boundaries to correctly classify every point in the training data.

This is a classic case of over-fitting: our model has produced extremely specific decision rules that work for the training data but are not representative of our test data. Sometimes simplicity is better!
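A quick way to spot over-fitting is to compare training and test accuracy as the tree grows: the training score keeps rising towards 100% while the test score stalls or drops. The sketch below assumes synthetic data from make_classification, with flip_y=0.1 assigning random labels to about 10% of the samples to imitate noisy measurements; the exact numbers will differ from the penguins.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic, deliberately noisy data (an assumption for illustration)
X, y = make_classification(n_samples=400, n_features=4, n_informative=3,
                           n_redundant=0, flip_y=0.1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

results = {}
for depth in [1, 2, 3, 5, 10, None]:  # None lets the tree grow until every leaf is pure
    clf = DecisionTreeClassifier(max_depth=depth, random_state=0).fit(X_train, y_train)
    results[depth] = (clf.score(X_train, y_train), clf.score(X_test, y_test))
    print(f"max_depth={depth}: train={results[depth][0]:.2f} test={results[depth][1]:.2f}")
```

A large gap between the train and test columns at high depth is the signature of over-fitting described above.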

Exercise: Adding noise to the training data

The drop in test accuracy for deeper trees was only slight, so this data doesn’t seem especially prone to overfitting. Why might this be? There are at least two factors contributing to these results:

- We only have 4 predictors. With so few predictors, there are only so many unique tree structures that can be tested/formed. This makes overfitting less likely.

- Our data is sourced from a Python library, and has been cleaned/vetted. Real-world data typically has more noise.

Let’s try adding a small amount of noise to the data using the code below. How does this impact the ideal setting for the tree depth?

PYTHON

# 1) LOAD DATA (if not loaded already)

import seaborn as sns

dataset = sns.load_dataset("penguins")

dataset.head()

# 2) Extract the data we need and drop NaNs (if not done already)

feature_names = ["bill_length_mm", "bill_depth_mm", "flipper_length_mm", "body_mass_g"]

dataset.dropna(subset=feature_names, inplace=True)

class_names = dataset["species"].unique()

X = dataset[feature_names]

y = dataset["species"]

# 3) ADD RANDOM NOISE TO X

import numpy as np

stds = X.std(axis=0).to_numpy()

# Generate noise and scale it

# Set seed for reproducibility

np.random.seed(42)

noise = np.random.normal(0, 1, X.shape) # sample numbers from normal distribution

scaled_noise = noise * stds # noise with the same standard deviation as each feature

X_noisy = X + scaled_noise

import matplotlib.pyplot as plt

fig01 = sns.scatterplot(X, x=feature_names[0], y=feature_names[1], hue=dataset["species"])

plt.show()

fig02 = sns.scatterplot(X_noisy, x=feature_names[0], y=feature_names[1], hue=dataset["species"])

plt.show()

# 4) TRAIN/TEST SPLIT

from sklearn.model_selection import train_test_split

# X_train, X_test, y_train, y_test = train_test_split(

# X, y, test_size=0.2, random_state=0, stratify=y

# )

X_train, X_test, y_train, y_test = train_test_split(

X_noisy, y, test_size=0.2, random_state=0, stratify=y

)

# 5) HYPERPARAMETER TUNING

from sklearn.tree import DecisionTreeClassifier

import pandas as pd

max_depths = list(range(1, 200))

accuracy = []

for d in max_depths:

    clf = DecisionTreeClassifier(max_depth=d)

    clf.fit(X_train, y_train)

    acc = clf.score(X_test, y_test)

    accuracy.append((d, acc))

acc_df = pd.DataFrame(accuracy, columns=["depth", "accuracy"])

sns.lineplot(acc_df, x="depth", y="accuracy")

plt.xlabel("Tree depth")

plt.ylabel("Accuracy")

plt.show()

Classification using support vector machines

Next, we’ll look at another commonly used classification algorithm, and see how it compares. Support Vector Machines (SVMs) work in a way that is conceptually similar to your own intuition when first looking at the data. They devise a set of hyperplanes that delineate the feature space, such that each region ideally contains only observations from one class, and the boundaries fall between classes. One of the core strengths of SVMs is their ability to handle non-linear relationships between features by implicitly transforming the data into a higher-dimensional space (the "kernel trick"). This transformation allows SVMs to find a linear boundary (hyperplane) in this new space, which corresponds to a non-linear boundary in the original space.

What are the “trainable parameters” in an SVM? For a linear SVM, the trainable parameters are:

- Weight vector: A vector that defines the orientation of the hyperplane. Its size is equal to the number of features in X.

- Bias: A scalar value that shifts the hyperplane away from the origin; together with the weight vector, it is chosen during training to maximize the margin.
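For a linear kernel, Scikit-Learn exposes these learned parameters as coef_ (weights) and intercept_ (biases). SVC handles more than two classes with a one-vs-one scheme, so with 3 classes it learns 3 * 2 / 2 = 3 hyperplanes, each with one weight per feature. A minimal sketch on the bundled iris data (a stand-in for the penguins, also 3 classes and 4 features):

```python
from sklearn import svm
from sklearn.datasets import load_iris
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_scaled = StandardScaler().fit_transform(X)

linear_svm = svm.SVC(kernel="linear").fit(X_scaled, y)

# One-vs-one with 3 classes: 3 hyperplanes
print("weights shape:", linear_svm.coef_.shape)     # one weight per feature, per hyperplane
print("biases shape:", linear_svm.intercept_.shape)  # one bias per hyperplane
```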

When to Choose SVM Over Decision Tree

- High-Dimensional Data:

  - Why SVM: SVMs excel in high-dimensional spaces because the kernel trick allows them to separate classes even in complex feature spaces without explicitly mapping the data.

  - Why Not Decision Tree: Decision trees can struggle with high-dimensional data: the number of candidate splits grows with the number of features, and with limited data a tree can latch onto spurious splits, leading to overfitting or underperformance.

- Accuracy over Interpretability:

  - Why SVM: SVMs are often considered black-box models, trading interpretability for accuracy.

  - Why Not Decision Tree: Decision trees are typically interpretable, making them better if you need to explain your model.

Standardizing data

Unlike decision trees, SVMs require an additional pre-processing step for our data: we need to standardize or “z-score” it. Our raw data has features with very different magnitudes: bill length is measured in tens of millimetres, whereas body mass is measured in thousands of grams. If we trained an SVM directly on this data, its distance calculations would be dominated by the feature with the greatest variance (body mass), and the other features would barely influence the decision boundary.

Standardizing maps each feature to a new range so that it has a mean of 0 and a standard deviation of 1. This places all features on the same playing field, so that no feature dominates the SVM’s decision boundaries simply because of the units it was measured in.
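Written out, standardization computes z = (x − μ) / σ for each feature, where μ and σ are that feature’s mean and standard deviation. The sketch below, using a few hypothetical measurements, checks that a hand-written z-score matches StandardScaler (which uses the population standard deviation, ddof=0).

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical rows: bill length in mm, body mass in g (very different scales)
X = np.array([[39.1, 3750.0],
              [46.5, 3800.0],
              [50.0, 5700.0],
              [37.8, 3400.0]])

# z = (x - mean) / std, computed column by column
z_manual = (X - X.mean(axis=0)) / X.std(axis=0)
z_sklearn = StandardScaler().fit_transform(X)

print(np.round(z_sklearn, 3))
print("column means:", z_sklearn.mean(axis=0).round(6))
print("column stds:", z_sklearn.std(axis=0).round(6))
```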

When to Standardize Your Data: A Broader Overview

Standardization is an essential preprocessing step for many machine learning models, particularly those that rely on distance-based calculations to make predictions or extract features. These models are sensitive to the scale of the input features because their mathematical foundations involve distances, magnitudes, or directions in the feature space. Without standardization, features with larger ranges can dominate the calculations, leading to suboptimal results. However, not all models require standardization; some, like decision trees, operate on thresholds and are unaffected by feature scaling. Here’s a breakdown of when to standardize, explicitly explaining the role of distance-based calculations in each case.

When to Standardize: Models That Use Distance-Based Calculations

Support Vector Machines (SVMs): SVMs calculate the distance of data points to a hyperplane and aim to maximize the margin (the distance between the hyperplane and the nearest points, called support vectors).

k-Nearest Neighbors (k-NN): k-NN determines class or value predictions based on the distance between a query point and its k-nearest neighbors in the feature space.

Logistic Regression with Regularization: Regularization terms (e.g., L1 or L2 penalties) involve calculating the magnitude (distance) of the parameter vector to reduce overfitting and encourage simplicity.

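Principal Component Analysis (PCA): PCA identifies principal components as the directions of highest variance in the feature space (equivalently, the axes that minimize the squared Euclidean distances from the data points to their projections). Because variance depends on units, unscaled features with large ranges dominate the components.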

Neural Networks (NNs): Neural networks rely on gradient-based optimization to learn weights. If input features have vastly different scales, gradients can become unstable, slowing down training or causing convergence issues. Standardizing or normalizing (scaling to the range 0 to 1) features puts all inputs on a comparable scale, so that no single input dominates the optimization process.

Linear Regression (for Interpreting Many Coefficients): While linear regression itself doesn’t rely on distance-based calculations, standardization is crucial when interpreting coefficients because it ensures that all features are on the same scale, making their relative importance directly comparable. Without standardization, coefficients in linear regression reflect the relationship between the dependent variable and a feature in the units of that feature, making it difficult to compare features with different scales (e.g., height in centimeters vs. weight in kilograms).
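To see the effect on an SVM in practice, this sketch uses the wine dataset bundled with Scikit-Learn (a stand-in chosen because its features range from values below 1 up to over 1000 for proline) and compares an SVC trained on raw features against one trained on standardized features. The split and the default SVC settings are arbitrary choices for illustration.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Same model, with and without standardization
raw_acc = SVC().fit(X_train, y_train).score(X_test, y_test)
scaled_acc = make_pipeline(StandardScaler(), SVC()).fit(X_train, y_train).score(X_test, y_test)

print(f"SVM on raw features:          {raw_acc:.2f}")
print(f"SVM on standardized features: {scaled_acc:.2f}")
```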

When to Skip Standardization: Models That Don’t Use Distance-Based Calculations

Decision Trees: Decision trees split data based on thresholds, independent of feature scales, without relying on any distance-based calculations.

Random Forests: Random forests aggregate decisions from multiple trees, which also use thresholds rather than distance-based metrics.

Gradient Boosted Trees: Gradient boosting optimizes decision trees sequentially, focusing on residuals and splits rather than distance measures.
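We can check the claim that trees ignore feature scale with a small experiment. This sketch assumes synthetic data from make_classification, with one feature multiplied by 1000 to mimic grams vs millimetres; a tree trained on raw features and one trained on standardized features should make the same predictions, up to rare floating-point edge cases.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=300, n_features=4, random_state=1)
X[:, 0] *= 1000  # put one feature on a much larger scale
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

scaler = StandardScaler().fit(X_train)

# Identical trees apart from the input scaling
tree_raw = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
tree_scaled = DecisionTreeClassifier(random_state=0).fit(scaler.transform(X_train), y_train)

agreement = np.mean(tree_raw.predict(X_test) == tree_scaled.predict(scaler.transform(X_test)))
print(f"Prediction agreement: {agreement:.1%}")
```

Standardizing is a per-feature, order-preserving transformation, so every threshold split on raw values has an equivalent split on scaled values.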

By understanding whether a model relies on distance-based calculations (or benefits from standardized features for interpretability), you can decide whether standardization is necessary, ensuring that your preprocessing pipeline is well-suited to the algorithm you’re using.

PYTHON

# Extract clean set of data

feature_names = ['bill_length_mm', 'bill_depth_mm', 'flipper_length_mm', 'body_mass_g']

dataset.dropna(subset=feature_names, inplace=True)

class_names = dataset['species'].unique()

X = dataset[feature_names]

y = dataset['species']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

PYTHON

from sklearn import preprocessing

import pandas as pd

scaler = preprocessing.StandardScaler()

# Learn each feature's mean and standard deviation from the training data only

scaler.fit(X_train)

X_train_scaled = pd.DataFrame(scaler.transform(X_train), columns=X_train.columns, index=X_train.index)

X_test_scaled = pd.DataFrame(scaler.transform(X_test), columns=X_test.columns, index=X_test.index)

Note that we fit the scaler to our training data only; we then use this same fitted scaler to transform our testing data, so no information from the test set leaks into training.
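A Scikit-Learn Pipeline performs this fit-on-training-data-only step automatically, which makes it harder to leak test data into the scaler by accident. A minimal sketch using make_pipeline on the bundled iris data (a stand-in for the penguins), with the SVC settings used in this episode:

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

# fit() scales with statistics from X_train; score() reuses them to transform X_test
model = make_pipeline(StandardScaler(), SVC(kernel="poly", degree=3, C=1.5))
model.fit(X_train, y_train)
test_acc = model.score(X_test, y_test)
print("test accuracy:", test_acc)

scaler = model.named_steps["standardscaler"]  # make_pipeline names steps after their classes
print("scaler means match training data:", np.allclose(scaler.mean_, X_train.mean(axis=0)))
```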

With this scaled data, training the models works exactly the same as before.

PYTHON

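from sklearn import svm

from sklearn.tree import DecisionTreeClassifier

# Decision tree baseline on the unscaled data (trees don't need scaling)

clf = DecisionTreeClassifier(max_depth=2)

clf.fit(X_train, y_train)

clf_score = clf.score(X_test, y_test)

SVM = svm.SVC(kernel='poly', degree=3, C=1.5)

SVM.fit(X_train_scaled, y_train)

svm_score = SVM.score(X_test_scaled, y_test)

print("Decision tree score is ", clf_score)

print("SVM score is ", svm_score)

We can again visualise the decision space produced, also using only two features: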

PYTHON

x2 = X_train_scaled[[feature_names[0], feature_names[1]]]

SVM = svm.SVC(kernel='poly', degree=3, C=1.5)

SVM.fit(x2, y_train)

DecisionBoundaryDisplay.from_estimator(SVM, x2)

sns.scatterplot(x2, x=feature_names[0], y=feature_names[1], hue=y_train)

plt.show()

SVM parameters:

- kernel: Specifies how the SVM transforms the data to find patterns; start with 'rbf' for most cases, 'linear' for high-dimensional data, or 'poly' for polynomial relationships.

  - Linear Kernel: Directly computes the dot product between input vectors; best for linearly separable data and high-dimensional spaces, offering simplicity and efficiency.

  - Poly Kernel: Computes polynomial relationships of features, allowing for flexible decision boundaries; ideal for data with polynomial patterns.

  - RBF (Radial Basis Function) Kernel: Uses a Gaussian function to create highly flexible decision boundaries; effective for non-linear, complex data.

- degree: Sets the complexity of the polynomial kernel (it is ignored by the other kernels); use degree=3 for cubic relationships, and avoid going higher unless you have lots of data.

- C: Balances smoothness of the decision boundary against misclassifications; start with C=1, increase it for tighter boundaries that fit the training data more closely, and decrease it to prevent overfitting.
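Rather than adjusting kernel and C by hand, their combinations can be searched systematically with cross-validation on the training set. This is a sketch using GridSearchCV on the bundled iris data as a stand-in; the grid values are arbitrary starting points, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

pipe = make_pipeline(StandardScaler(), SVC())

# Parameter names are prefixed with the pipeline step name ("svc__")
param_grid = {
    "svc__kernel": ["linear", "poly", "rbf"],
    "svc__C": [0.1, 1, 10],
}
search = GridSearchCV(pipe, param_grid, cv=5)
search.fit(X_train, y_train)  # cross-validation uses only the training data

print("best settings:", search.best_params_)
print("test accuracy:", search.score(X_test, y_test))
```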

While this SVM model performs slightly worse than our decision tree (95.6% vs. 98.5%), its smooth non-linear boundaries may generalize better as it is exposed to more real data, because decision trees are prone to overfitting and need many axis-aligned splits to approximate even simple curved boundaries. It’s important to pick a model that is appropriate for your problem and data trends!

- Classification is a supervised learning task where the goal is to predict discrete class labels from labeled examples.

- Train/test splits let us estimate how well a classifier will generalize to unseen data; for classification, stratifying by class is often important.

- Decision trees are easy to train and interpret, but can overfit when depth and other hyperparameters are not controlled.

- Hyperparameters (such as max_depth) control model complexity and behavior but are not learned directly from the data.

- Models that rely on distances or geometric margins in feature space (such as SVMs) usually require standardized inputs; tree-based models typically do not.

- Comparing different classifiers (for example, decision trees vs SVMs) on the same train/test split helps reveal tradeoffs between accuracy, robustness, and interpretability.

Content from Ensemble methods

Last updated on 2025-11-07 | Edit this page

Overview

Questions

- What are ensemble methods, and why might they outperform a single model?

- How do stacking, bagging, and boosting differ conceptually and in practice?

- How can we use random forests in Scikit-Learn to improve on a single decision tree for classification?

- How can we combine different regression models into a single ensemble using VotingRegressor?

Objectives

- Define ensemble methods and explain why combining multiple models can reduce overfitting and improve robustness.

- Distinguish between stacking, bagging, and boosting and describe when each might be appropriate.

- Train and evaluate a RandomForestClassifier on the penguins dataset and compare its performance to a single decision tree.

- Inspect individual trees within a random forest and visualize decision boundaries to build intuition about how bagging works.

- Use Scikit-Learn’s VotingRegressor to stack multiple regression models (for example, random forest, gradient boosting, and linear regression) on the California housing dataset.

- Compare the performance of individual regressors to the stacked ensemble and interpret differences in test scores.

- Relate ensemble methods to bias–variance tradeoffs and overfitting, and discuss when ensembles are likely to be helpful in practice.

Ensemble methods

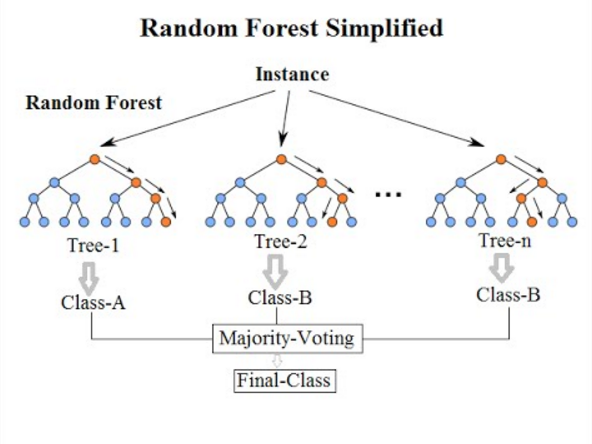

What’s better than one decision tree? Perhaps two? Or three? How about enough trees to make up a forest? Ensemble methods bundle individual models together and use each of their outputs to contribute towards a final consensus for a given problem. Ensemble methods are based on the mantra that the whole is greater than the sum of the parts.

Thinking back to the classification episode with decision trees, we quickly stumbled into the problem of overfitting our training data. If we combine predictions from a series of over- or under-fitting estimators, we can often produce a better final prediction than by using a single model, in the same way that humans often hear multiple opinions on a scenario before deciding a final outcome. Decision trees and regressions are often very sensitive to training outliers, and so are well suited to be part of an ensemble.

Ensemble methods are used for a variety of applications including, but not limited to, search systems and object detection. We can use any model/estimator available in Scikit-Learn to create an ensemble. There are three main approaches to creating ensembles:

- Stacking

- Bagging

- Boosting

Let’s explore them in a bit more depth.

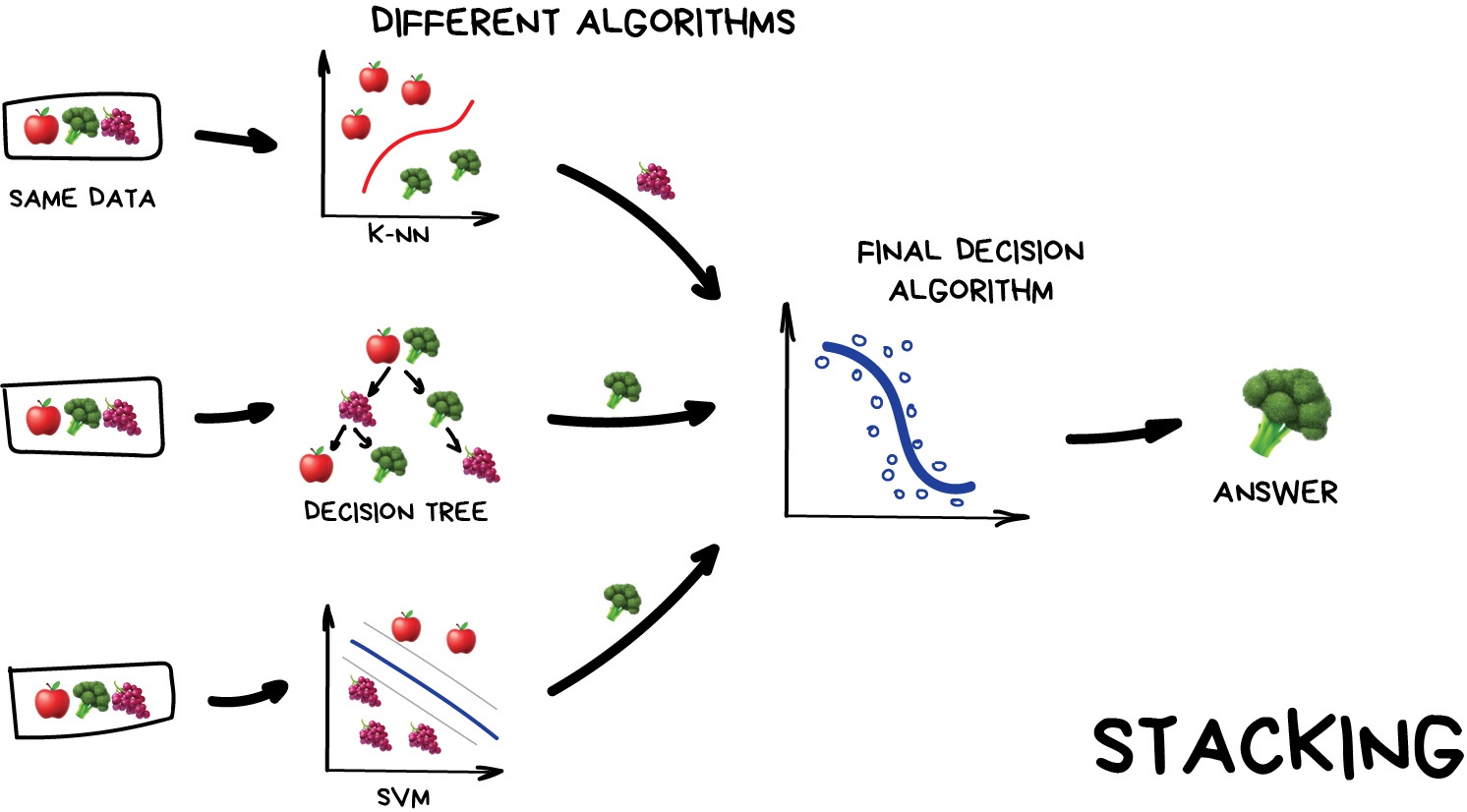

Stacking

This is where we train a series of different models/estimators on the same input data in parallel. We then take the output of each model and pass them into a final decision algorithm/model that makes the final prediction.

If we trained the same model multiple times on the same data we would expect very similar answers, so the emphasis with stacking is to choose different models that can be used to build up a reliable consensus. A regression model (for classification, a logistic regression) is then typically a good choice for the final decision-making model.
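Scikit-Learn implements this idea as StackingClassifier (and StackingRegressor), which trains the final model on cross-validated predictions from the base models. The sketch below assumes the bundled iris data and an arbitrary choice of three different base models, with a logistic regression as the final decision-making model.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

# Deliberately different base models, all trained on the same input data
base_models = [
    ("tree", DecisionTreeClassifier(max_depth=3, random_state=0)),
    ("knn", make_pipeline(StandardScaler(), KNeighborsClassifier())),
    ("svm", make_pipeline(StandardScaler(), SVC())),
]

# The final estimator combines the base models' outputs into one prediction
stack = StackingClassifier(estimators=base_models,
                           final_estimator=LogisticRegression(max_iter=1000))
stack.fit(X_train, y_train)
stack_acc = stack.score(X_test, y_test)
print("stacked test accuracy:", stack_acc)
```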

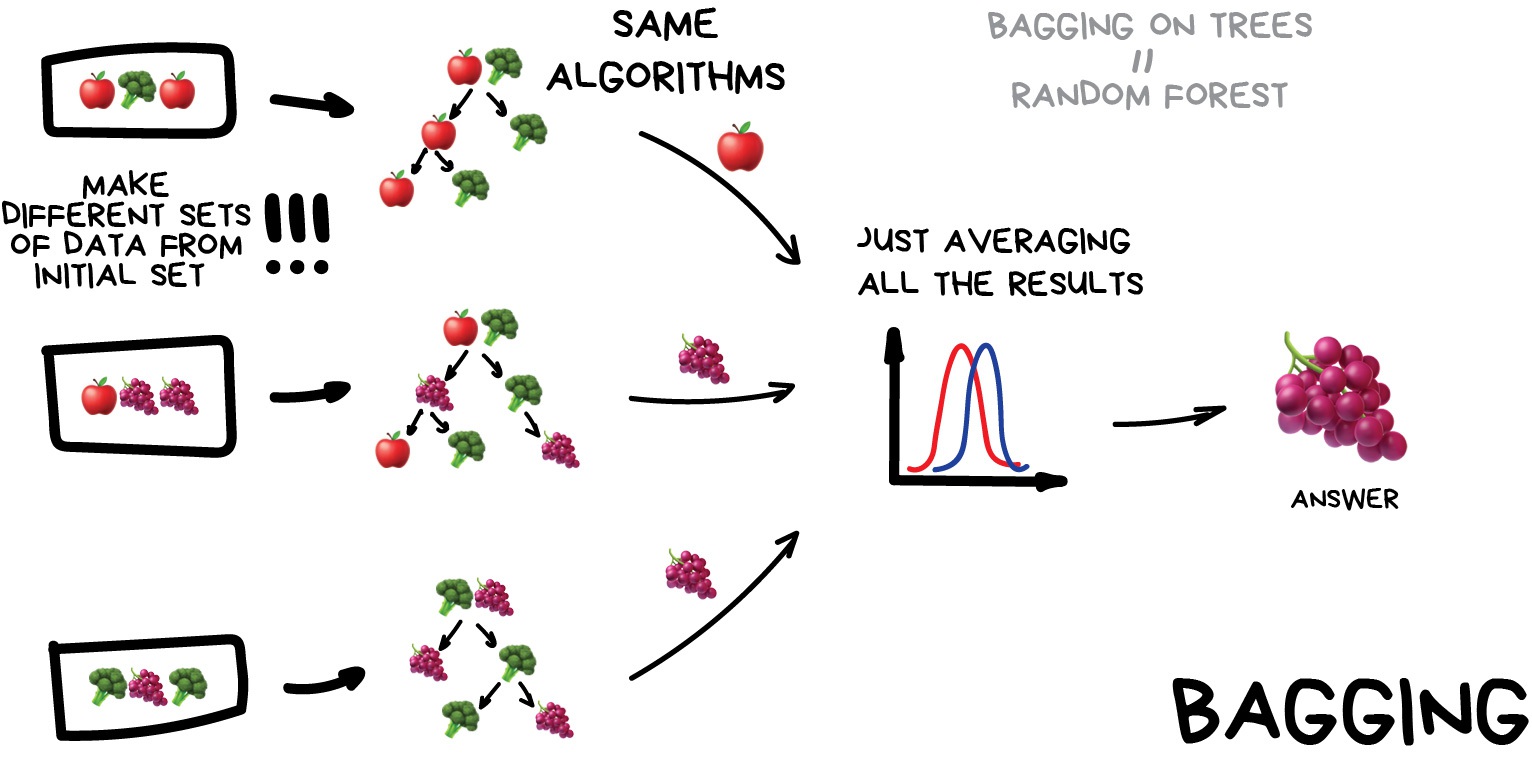

Bagging (a.k.a. Bootstrap AGGregatING)

This is where we use the same model/estimator and fit it on different subsets of the training data. We can then average the results from each model to produce a final prediction. The subsets are drawn at random with replacement, so the same sample can appear more than once within a subset.

How to remember: The name comes from combining two ideas, bootstrap (random sampling with replacement) and aggregating (combining predictions). Imagine putting your data into a “bag,” pulling out random samples (with replacement), training models on those samples, and combining their outputs.
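The bootstrap step itself is easy to sketch with NumPy. Drawing n indices with replacement typically keeps only about 63% of the distinct rows in each bag (on average 1 − (1 − 1/n)^n, which approaches 1 − 1/e), while the remaining rows are left out of that bag; the sample size and seed here are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 1000
indices = np.arange(n_samples)

# One bootstrap sample: n_samples draws *with replacement*
bootstrap = rng.choice(indices, size=n_samples, replace=True)

unique_fraction = len(np.unique(bootstrap)) / n_samples
print(f"distinct rows in this bag: {unique_fraction:.1%}")
print(f"rows left out of this bag: {1 - unique_fraction:.1%}")
```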

The most common example is known as the Random Forest algorithm, which we’ll take a look at later on. Random Forests are typically used as a faster, computationally cheaper alternative to Neural Networks, which is ideal for real-time applications like camera face detection prompts.

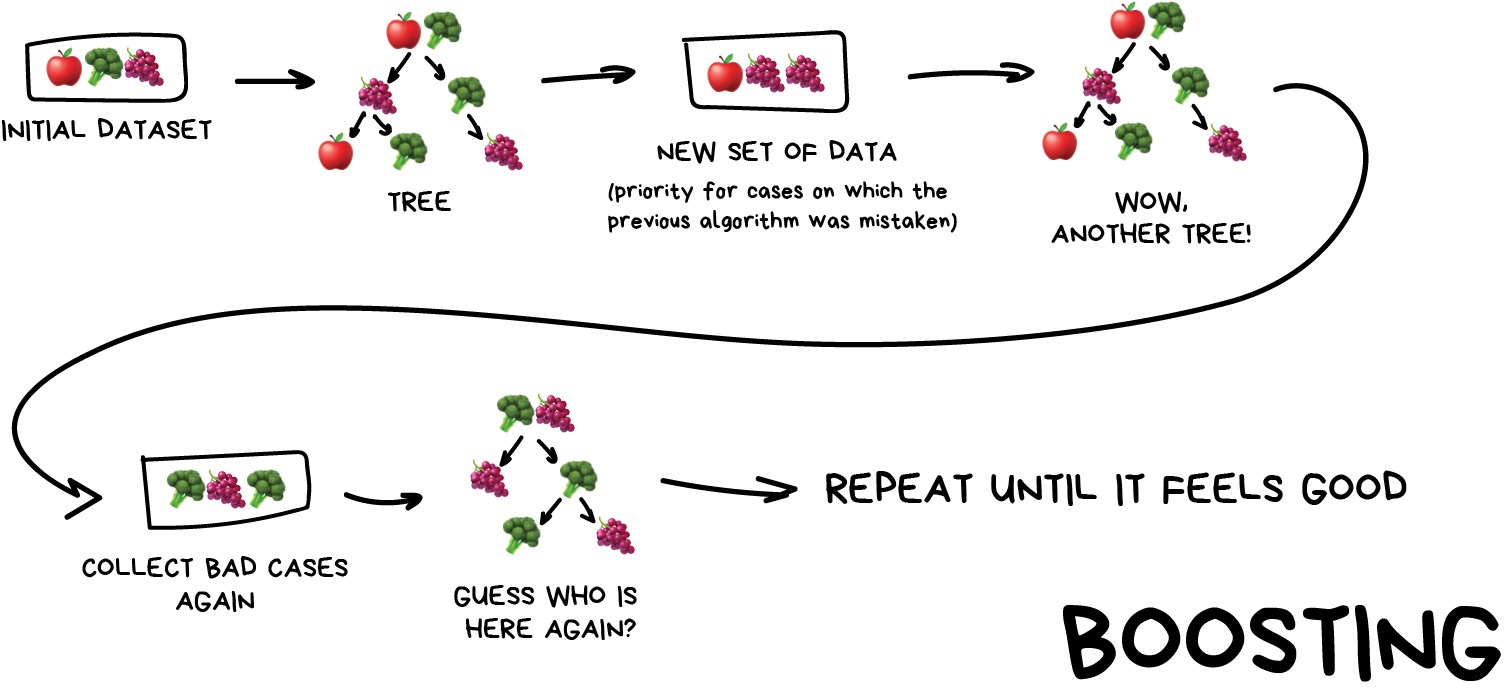

Boosting

This is where we train a single type of model/estimator on an initial dataset, test its accuracy, and then subsequently train the same type of model on the poorly predicted samples, i.e. each new model pays most attention to the data that were incorrectly predicted by the previous one.

Just like bagging, boosting is trained mostly on subsets; however, in this case the subsets are not randomly generated but are instead built from poorly estimated predictions. Boosting can produce some very high accuracies by learning from its mistakes, but because of the iterative nature of these improvements it doesn’t parallelize well, unlike the other ensemble methods. Despite this, it can still be a faster and computationally cheaper alternative to Neural Networks.
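Scikit-Learn’s GradientBoostingClassifier is one widely used boosting method. Strictly, it fits each new tree to the errors (loss gradients) of the ensemble built so far rather than to a literal subset of badly predicted samples, but it shows the same sequential "learn from mistakes" idea. Its staged_predict method lets us watch the test accuracy after each tree is added. The synthetic data and settings are assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=500, n_features=6, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

booster = GradientBoostingClassifier(n_estimators=50, max_depth=2, random_state=0)
booster.fit(X_train, y_train)

# staged_predict yields the ensemble's predictions after each tree is added, in order
staged_acc = [np.mean(pred == y_test) for pred in booster.staged_predict(X_test)]
print(f"after 1 tree: {staged_acc[0]:.2f}, after {len(staged_acc)} trees: {staged_acc[-1]:.2f}")
```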

Ensemble summary

Machine learning jargon can often be hard to remember, so here is a quick summary of the 3 ensemble methods:

- Stacking - same dataset, different models, trained in parallel

- Bagging - different subsets, same models, trained in parallel

- Boosting - subsets of bad estimates, same models, trained in series

Which ensemble method is best?

| Ensemble method | What it does | Best for | Avoid if |
|---|---|---|---|
| Stacking | Combines predictions from different models trained on the same dataset using a meta-model. | Leveraging diverse models to improve overall performance. | You need simple and fast models or lack diverse base learners. |
| Bagging | Trains the same model on different subsets of the data (via bootstrapping) and averages their results. | Reducing variance (e.g., overfitting) and stabilizing predictions in noisy/small datasets. | The problem requires reducing bias or the base model is already stable (e.g., linear regression). |
| Boosting | Sequentially trains models, focusing on correcting errors made by previous models. | Capturing complex patterns in large datasets and achieving the highest possible accuracy. | The dataset is small or noisy, or you lack computational resources. |

Using Bagging (Random Forests) for a classification problem

In this session we’ll take another look at the penguins data and apply one of the most common bagging approaches, random forests, to try and solve our species classification problem. First we’ll load in the dataset and define a train and test split.

PYTHON

# import libraries

import numpy as np

import pandas as pd

import seaborn as sns

from sklearn.model_selection import train_test_split

# load penguins data

penguins = sns.load_dataset('penguins')

# prepare and define our data and targets

feature_names = ['bill_length_mm', 'bill_depth_mm', 'flipper_length_mm', 'body_mass_g']

penguins.dropna(subset=feature_names, inplace=True)

species_names = penguins['species'].unique()

X = penguins[feature_names]

y = penguins.species

# Split data in training and test set

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=5, stratify=y)

print("train size:", X_train.shape)

print("test size", X_test.shape)

X_train.head()

We’ll now take a look at how we can use ensemble methods to perform a classification task such as identifying penguin species! We’re going to use the random forest classifier available in Scikit-Learn, which is a widely used example of a bagging approach.

Random forests are built on decision trees and can provide another way to address over-fitting. Rather than classifying based on one single decision tree (which could overfit the data), a random forest averages the results of many trees, giving more robust and often more accurate estimates than any single tree in the ensemble.

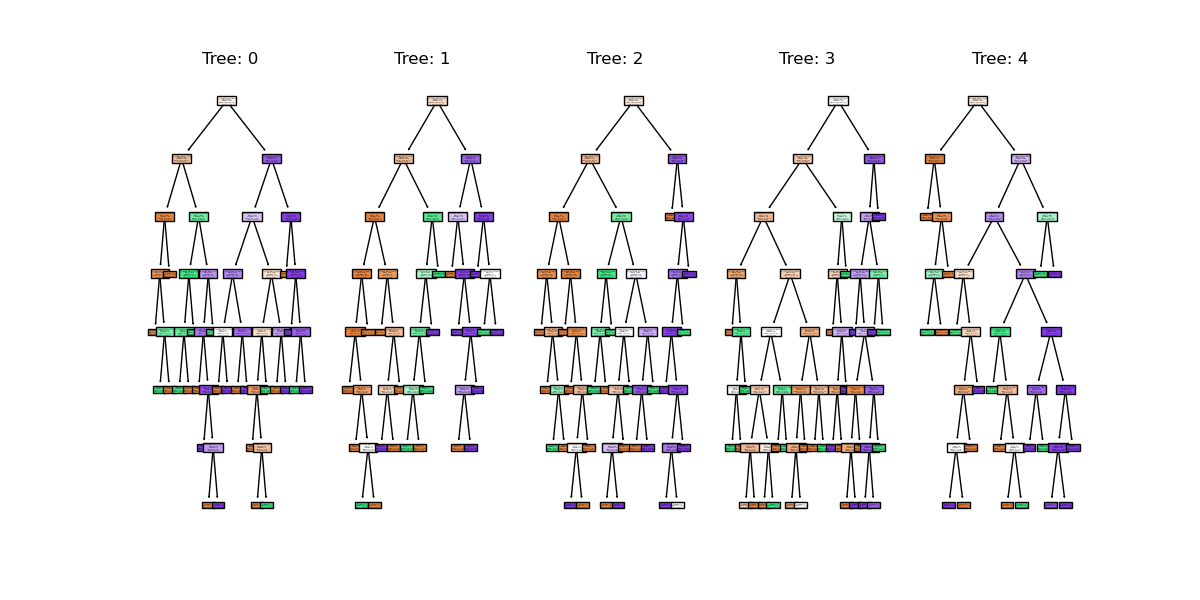

We can now define a random forest estimator and train it using the penguin training data. It has a similar set of hyper-parameters to the DecisionTreeClassifier, but with an extra parameter called n_estimators, which is the number of trees in the forest.

PYTHON

from sklearn.ensemble import RandomForestClassifier

from sklearn.tree import plot_tree

# Define our model

# extra parameter called n_estimators which is number of trees in the forest

# min_samples_leaf is the minimum number of training samples allowed in a leaf (an end node of a tree)

forest = RandomForestClassifier(n_estimators=100, max_depth=5, min_samples_leaf=1, random_state=0)

# train our model

forest.fit(X_train, y_train)

# Score our model

print(forest.score(X_test, y_test))

You might notice that we have a different value (hopefully increased) compared with the decision tree classifier from the classification episode. Note that this episode uses a different, stratified train/test split, so the comparison is only approximate. Let’s plot the first 5 trees in the forest to get an idea of how this model differs from a single decision tree.

PYTHON

import matplotlib.pyplot as plt

fig, axes = plt.subplots(nrows=1, ncols=5, figsize=(12, 6))

# plot first 5 trees in forest

for index in range(0, 5):

    plot_tree(forest.estimators_[index],

              class_names=species_names,

              feature_names=feature_names,

              filled=True,

              ax=axes[index])

    axes[index].set_title(f'Tree: {index}')

plt.show()

We can see the first 5 (of 100) trees that were fitted as part of the forest.

How votes are averaged in scikit-learn’s Random Forest

In scikit-learn, the RandomForestClassifier aggregates votes using averaged class probabilities rather than a strict majority vote.

- Each decision tree produces a probability distribution over classes for a given input (based on the proportion of samples from each class in the leaf node where that input ends up).

- The forest then takes the mean of those probabilities across all trees.

- The predicted class is the one with the highest average probability.

While traditional random forests are often described as using a “majority vote”, scikit-learn implements what’s called soft voting: averaging probabilities instead of labels. This provides smoother decision boundaries and can improve calibration.
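We can verify this soft voting by hand: averaging predict_proba over the fitted trees in estimators_ should reproduce the forest’s own predict_proba. A minimal sketch on the bundled iris data (a stand-in for the penguins):

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)
forest = RandomForestClassifier(n_estimators=25, max_depth=3, random_state=0).fit(X, y)

# Soft voting: average the class probabilities predicted by every tree
mean_proba = np.mean([tree.predict_proba(X) for tree in forest.estimators_], axis=0)
print("matches forest.predict_proba:", np.allclose(mean_proba, forest.predict_proba(X)))

# The predicted class is the one with the highest average probability
manual_pred = forest.classes_[mean_proba.argmax(axis=1)]
print("agreement with forest.predict:", np.mean(manual_pred == forest.predict(X)))
```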

If we train the random forest estimator using the same two features as in our decision tree example, what do we think the plot will look like?

PYTHON

# let's train a random forest on only two features (bill length and body mass)
from sklearn.inspection import DecisionBoundaryDisplay

f1 = feature_names[0]
f2 = feature_names[3]

# plot the classification space for bill length and body mass with a random forest
forest_2d = RandomForestClassifier(n_estimators=100, max_depth=5, min_samples_leaf=1, random_state=0)
forest_2d.fit(X_train[[f1, f2]], y_train)

# plot the decision boundaries made by the model for the two trained features
d = DecisionBoundaryDisplay.from_estimator(forest_2d, X_train[[f1, f2]], grid_resolution=500)
sns.scatterplot(X_train, x=f1, y=f2, hue=y_train, palette="husl")
plt.show()

There is still some overfitting, indicated by the regions that contain only single points. However, with the same hyper-parameter settings used to fit the decision tree classifier, we can see that overfitting is reduced.

Stacking a regression problem

We’ve had a look at a bagging approach, but we’ll now take a look at a stacking approach and apply it to a regression problem. We’ll also introduce a new dataset to play around with.

California house price prediction

The California housing dataset for regression problems contains 8 training features, such as Median Income, House Age, Average Rooms and Average Bedrooms, for 20,640 census block groups (districts). The target variable is the median house value for each of those 20,640 districts. Note that all prices are in units of $100,000. This toy dataset is available through the scikit-learn library. We’ll start by loading the dataset and very briefly inspecting its attributes by printing them out.

PYTHON

from sklearn.datasets import fetch_california_housing

# load the dataset (scikit-learn downloads it the first time it is requested)
X, y = fetch_california_housing(return_X_y=True, as_frame=True)

print(X.shape)
print(X.head())

print("Housing price as the target: ")
# the target is the median house value, in units of $100,000
print(y.head())
print(y.shape)

This dataset is for learning how to create and use ensemble methods, and it is a toy dataset. We will therefore use it as-is, without inspecting, cleaning or pre-processing it further.

Exercise: Investigate and visualise the dataset

For this episode we simply want to learn how to build and use an ensemble rather than actually solve a regression problem. To build up your skills as an ML practitioner, investigate and visualise this dataset. What can you say about the dataset itself, and what can you summarise about any potential relationships or prediction problems?

Let’s start by splitting the dataset into training and testing subsets:

PYTHON

# split into train and test sets (an 80%/20% train-test split)
from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=5)

print(f'train size: {X_train.shape}')
print(f'test size: {X_test.shape}')

Let’s stack a series of regression models. The random forest classifier derives a result from a series of trees. In the same way, we will combine the results from a series of different models in our stack. This is done using an ensemble meta-estimator called a VotingRegressor.

We’ll use a VotingRegressor to combine a random forest, a gradient boosting regressor and a linear regressor.

But wait, aren’t random forests/decision trees for classification problems?

Yes, they are, but quite often in machine learning various models can be used to solve both regression and classification problems.

Decision trees in particular can be used to “predict” specific numerical values instead of categories, essentially by binning a group of values into a single value.

This works well for periodic/repeating numerical data. These trees are extremely sensitive to the data they are trained on, which makes them well suited to being averaged together in a random forest.
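The “binning” idea above can be seen in a small sketch that fits a shallow DecisionTreeRegressor to a synthetic sine wave. The data are invented for illustration. Every sample that lands in the same leaf receives the same prediction, the mean target of that leaf, so a depth-2 tree can output at most four distinct values.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# a smooth, periodic target on a single feature (synthetic data, for illustration only)
rng = np.random.default_rng(0)
X = np.sort(rng.uniform(0, 2 * np.pi, size=200)).reshape(-1, 1)
y = np.sin(X).ravel()

# a depth-2 tree can split the feature axis into at most 2**2 = 4 bins (leaves)
tree = DecisionTreeRegressor(max_depth=2, random_state=0)
tree.fit(X, y)

# every sample in a leaf gets the same prediction: the mean target of that leaf
pred = tree.predict(X)
print("distinct predicted values:", np.unique(pred).round(3))
print("number of leaves:", tree.get_n_leaves())
```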

But wait again, isn’t a random forest (and a gradient boosting model) an ensemble method instead of a regression model?

Yes, they are, but each can be thought of as one big, complex model and used like any other model. The awesome thing about ensemble methods, and the common interface shared by Scikit-Learn models, is that you can put an ensemble in an ensemble!

A VotingRegressor fits each of its base estimators on the whole training dataset. It then averages their individual predictions to form a final prediction.
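As a rough check that the final prediction really is the plain average, the sketch below compares VotingRegressor.predict with the mean of its fitted members’ predictions. To keep it quick, it uses synthetic data from make_regression and only two members, a random forest and a linear regressor. These choices are assumptions for illustration, not the lesson’s setup. With no weights argument, the two results should agree to floating-point precision.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor, VotingRegressor
from sklearn.linear_model import LinearRegression

# synthetic regression data stands in for the California housing data here
X, y = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=0)

voting = VotingRegressor([
    ("rf", RandomForestRegressor(n_estimators=50, random_state=0)),
    ("lr", LinearRegression()),
])
voting.fit(X, y)

# voting.estimators_ holds the fitted copies of each base estimator
individual = np.column_stack([est.predict(X) for est in voting.estimators_])
manual_average = individual.mean(axis=1)

# with no weights given, the ensemble prediction is the plain mean of the members
print(np.allclose(manual_average, voting.predict(X)))
```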

PYTHON

from sklearn.ensemble import (
    GradientBoostingRegressor,
    RandomForestRegressor,
    VotingRegressor,
)
from sklearn.linear_model import LinearRegression

# initialise the estimators
rf_reg = RandomForestRegressor(random_state=5)
gb_reg = GradientBoostingRegressor(random_state=5)
linear_reg = LinearRegression()
voting_reg = VotingRegressor([("rf", rf_reg), ("gb", gb_reg), ("lr", linear_reg)])

# fit/train the voting estimator
voting_reg.fit(X_train, y_train)

# let's also fit/train the individual models for comparison
rf_reg.fit(X_train, y_train)
gb_reg.fit(X_train, y_train)
linear_reg.fit(X_train, y_train)

We fit the voting regressor in the same way we would fit a single model. When the voting regressor is instantiated, we pass it a list of (name, estimator) tuples containing the estimators we wish to stack: in this case the random forest, gradient boosting and linear regressors. To get a sense of what this is doing, let’s predict the first 20 samples in the test portion of the data and plot the results.

PYTHON

import matplotlib.pyplot as plt

# make predictions on the first 20 test samples (for visualisation)
X_test_20 = X_test.iloc[:20]
rf_pred = rf_reg.predict(X_test_20)
gb_pred = gb_reg.predict(X_test_20)
linear_pred = linear_reg.predict(X_test_20)
voting_pred = voting_reg.predict(X_test_20)

plt.figure()
plt.plot(gb_pred, "o", color="black", label="GradientBoostingRegressor")
plt.plot(rf_pred, "o", color="blue", label="RandomForestRegressor")
plt.plot(linear_pred, "o", color="green", label="LinearRegression")
plt.plot(voting_pred, "x", color="red", ms=10, label="VotingRegressor")
# hide the x-axis ticks: the sample index carries no meaning here
plt.tick_params(axis="x", which="both", bottom=False, top=False, labelbottom=False)
plt.ylabel("predicted")
plt.xlabel("test samples")
plt.legend(loc="best")
plt.title("Regressor predictions and their average")
plt.show()

Finally, let’s see how the average compares against each single estimator in the stack.

PYTHON

print(f'random forest: {rf_reg.score(X_test, y_test)}')
print(f'gradient boost: {gb_reg.score(X_test, y_test)}')
print(f'linear regression: {linear_reg.score(X_test, y_test)}')
print(f'voting regressor: {voting_reg.score(X_test, y_test)}')

Each of our models scores between 0.61 and 0.82. For regressors, the score method returns the coefficient of determination (R²), where 1.0 is a perfect fit. A score at the high end of that range is good, but one at the low end is a pretty poor fit. Do note that toy datasets are not representative of real-world data. However, we can see that the voting regressor fits different sub-models and then averages their individual predictions to form a final prediction. The benefit of this approach is that it can reduce overfitting and increase generalizability. Of course, we could try to improve our score in several ways: tweaking our individual model hyperparameters, using more advanced boosted models, or adjusting our training data features and train-test split.
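The score reported above for regressors is R², not an accuracy. The sketch below checks that model.score matches both sklearn.metrics.r2_score and the formula R² = 1 − SS_res / SS_tot. It uses synthetic data from make_regression, an assumption made because the housing data must be downloaded.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

# synthetic data stands in for the housing data (an assumption for this sketch)
X, y = make_regression(n_samples=200, n_features=3, noise=20.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=5)

model = LinearRegression().fit(X_train, y_train)
y_pred = model.predict(X_test)

# .score() on a regressor returns R^2 = 1 - SS_res / SS_tot
ss_res = np.sum((y_test - y_pred) ** 2)
ss_tot = np.sum((y_test - y_test.mean()) ** 2)
r2_manual = 1 - ss_res / ss_tot

print(model.score(X_test, y_test), r2_score(y_test, y_pred), r2_manual)
```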

Exercise: Stacking a classification problem.

Scikit-learn also has a method for stacking ensemble classifiers, sklearn.ensemble.VotingClassifier. Could you apply a stack to the penguins dataset using a random forest, an SVM and a decision tree classifier, or a selection of any other classifier estimators available in scikit-learn?

PYTHON

import seaborn as sns
from sklearn.model_selection import train_test_split

penguins = sns.load_dataset('penguins')
feature_names = ['bill_length_mm', 'bill_depth_mm', 'flipper_length_mm', 'body_mass_g']
penguins.dropna(subset=feature_names, inplace=True)
species_names = penguins['species'].unique()

# define data and targets
X = penguins[feature_names]
y = penguins.species

# split data into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=5)

print(f'train size: {X_train.shape}')
print(f'test size: {X_test.shape}')

The code above loads the penguins data and splits it into test and training portions. Have a play around with stacking some classifiers using sklearn.ensemble.VotingClassifier, using the code comments below as a guide.
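One possible shape for a solution is sketched below. To avoid giving the penguins answer away, and to run without a download, it assumes the iris dataset bundled with scikit-learn. The hyper-parameters are arbitrary choices for illustration. Swapping in the penguins X_train, X_test, y_train and y_test from the code above should work the same way.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# iris ships with scikit-learn, so it stands in for the penguins data here
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=5)

# the members of the stack: (name, estimator) pairs
members = [
    ("rf", RandomForestClassifier(n_estimators=100, max_depth=5, random_state=0)),
    ("svm", SVC()),
    ("dt", DecisionTreeClassifier(max_depth=5, random_state=0)),
]

# voting="hard" predicts the majority class label; voting="soft" would instead
# average predict_proba and would need SVC(probability=True)
stack = VotingClassifier(estimators=members, voting="hard")
stack.fit(X_train, y_train)

# fit each member on its own as well, mirroring the regression example
for name, est in members:
    est.fit(X_train, y_train)
    print(f"{name}: {est.score(X_test, y_test):.3f}")
print(f"voting classifier: {stack.score(X_test, y_test):.3f}")
```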

- Ensemble methods combine predictions from multiple models to produce more stable and accurate results than most single models.

- Bagging (such as random forests) trains the same model on different bootstrap samples and averages their predictions, usually reducing variance and overfitting.

- Boosting trains models in sequence, focusing later models on the mistakes of earlier ones, often improving accuracy at the cost of increased complexity and computation.

- Stacking uses a meta-model to combine the outputs of several diverse base models trained on the same data.

- Random forests often outperform single decision trees by averaging many noisy, high-variance trees into a more robust classifier.

- VotingRegressor and VotingClassifier provide a simple way to stack multiple estimators in Scikit-Learn for regression or classification tasks.

- Choosing an ensemble method and tuning its hyperparameters is closely tied to the bias–variance tradeoff and the characteristics of the dataset.

Content from Unsupervised methods - Clustering

Last updated on 2025-11-07

Overview

Questions

- What is unsupervised learning?

- How can we use clustering to find data points with similar attributes?

Objectives

- Understand the difference between supervised and unsupervised learning.

- Identify clusters in data using k-means clustering.

- Understand the limitations of k-means when clusters overlap.

- Use spectral clustering to overcome the limitations of k-means.

Unsupervised learning

In episode 2 we learnt about supervised learning. Now it is time to explore unsupervised learning.

Sometimes we do not have the luxury of using labelled data. This could be for a number of reasons:

- We have labelled data, but not enough to accurately train our model

- Our existing labelled data is low-quality or inaccurate

- It is too time-consuming to (manually) label more data

- We have data, but no idea what correlations might exist that we could model!

In this case we need to use unsupervised learning. As the name suggests, this time we do not “supervise” the ML algorithm by providing it labels, but instead we let it try to find its own patterns in the data and report back on any correlations that it might find. You can think of unsupervised learning as a way to discover labels from the data itself.

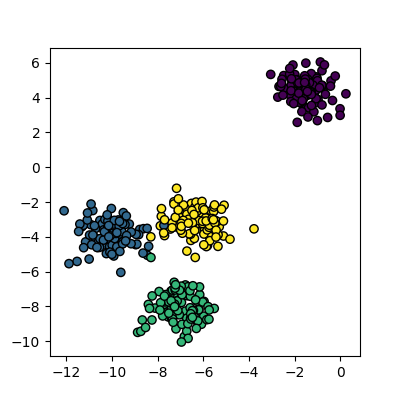

Clustering

Clustering is the grouping of data points which are similar to each other. It can be a powerful technique for identifying patterns in data. Clustering analysis does not require labelled training data, which is why it is known as an unsupervised learning technique. Because there is no labelled training phase, clustering can often be applied quickly.

Applications of clustering

- Looking for trends in data

- Reducing the data around a point to just that point (e.g. reducing colour depth in an image)

- Pattern recognition
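One example of reducing the data around a point to just that point is colour quantisation. The sketch below applies scikit-learn’s KMeans to a small synthetic image and replaces every pixel with the centre of the colour cluster it belongs to. The random 20 × 20 image and the choice of 4 clusters are assumptions for illustration.

```python
import numpy as np
from sklearn.cluster import KMeans

# a tiny random RGB "image" (synthetic, 20 x 20 pixels) stands in for a real photo
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(20, 20, 3)).astype(float)

# treat every pixel as a point in 3-D colour space
pixels = image.reshape(-1, 3)

# group the colours into 4 clusters; each cluster centre becomes a palette colour
kmeans = KMeans(n_clusters=4, n_init=10, random_state=0).fit(pixels)

# replace every pixel by the centre of its cluster, then restore the image shape
quantised = kmeans.cluster_centers_[kmeans.labels_].reshape(image.shape)

print("colours before:", len(np.unique(pixels, axis=0)))
print("colours after:", len(np.unique(quantised.reshape(-1, 3), axis=0)))
```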

K-means clustering

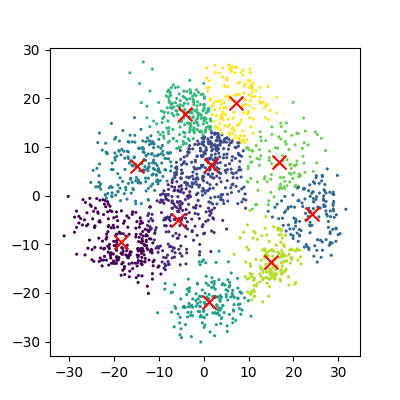

The k-means clustering algorithm is a simple clustering algorithm that tries to identify the centre of each cluster. It does this by searching for centre points that minimise the sum of squared distances between each centre and the points assigned to its cluster. The algorithm needs to be told how many clusters (k) to look for. A common technique is to try different numbers of clusters and combine this with other tests to decide on the best combination.
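The centre-finding procedure described above is usually implemented as Lloyd’s algorithm. It alternates between assigning points to their nearest centre and moving each centre to the mean of its points. The NumPy sketch below assumes a simple farthest-point initialisation, whereas scikit-learn’s KMeans uses k-means++ by default. The helper name kmeans_sketch is hypothetical, and the sketch is meant to show the idea, not to replace KMeans.

```python
import numpy as np

def kmeans_sketch(points, k, n_iter=100, seed=0):
    """Hypothetical helper: a minimal version of Lloyd's k-means algorithm."""
    rng = np.random.default_rng(seed)
    # initialise: one random point, then repeatedly the point farthest from existing centres
    centres = [points[rng.integers(len(points))]]
    for _ in range(k - 1):
        dist_to_nearest = np.min(
            np.linalg.norm(points[:, None, :] - np.array(centres)[None, :, :], axis=2), axis=1
        )
        centres.append(points[np.argmax(dist_to_nearest)])
    centres = np.array(centres)

    for _ in range(n_iter):
        # assignment step: label each point with its nearest centre
        dists = np.linalg.norm(points[:, None, :] - centres[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        # update step: move each centre to the mean of the points assigned to it
        new_centres = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centres[j]
            for j in range(k)
        ])
        if np.allclose(new_centres, centres):
            break  # centres have stopped moving, so the algorithm has converged
        centres = new_centres
    return centres, labels

# usage: three well-separated synthetic blobs of 50 points each
rng = np.random.default_rng(1)
blob_centres = np.array([[0.0, 0.0], [5.0, 5.0], [0.0, 5.0]])
points = np.vstack([c + rng.normal(scale=0.5, size=(50, 2)) for c in blob_centres])

centres, labels = kmeans_sketch(points, k=3)
print(np.round(centres, 2))
```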

Hyper-parameters again

‘K’ is also an example of a hyper-parameter for the k-means clustering technique. Another example of a hyper-parameter is the degree N of the polynomial in polynomial regression. Keep an eye out for others throughout the lesson!

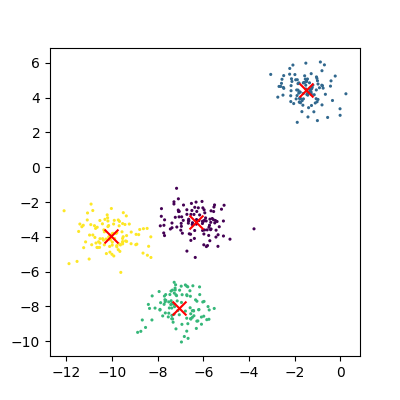

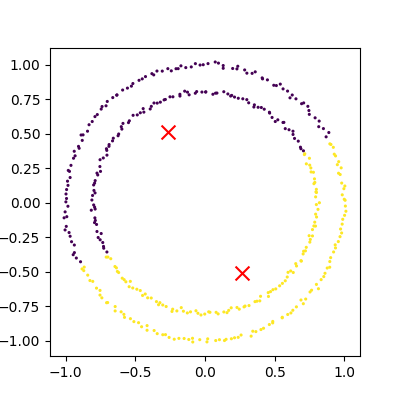

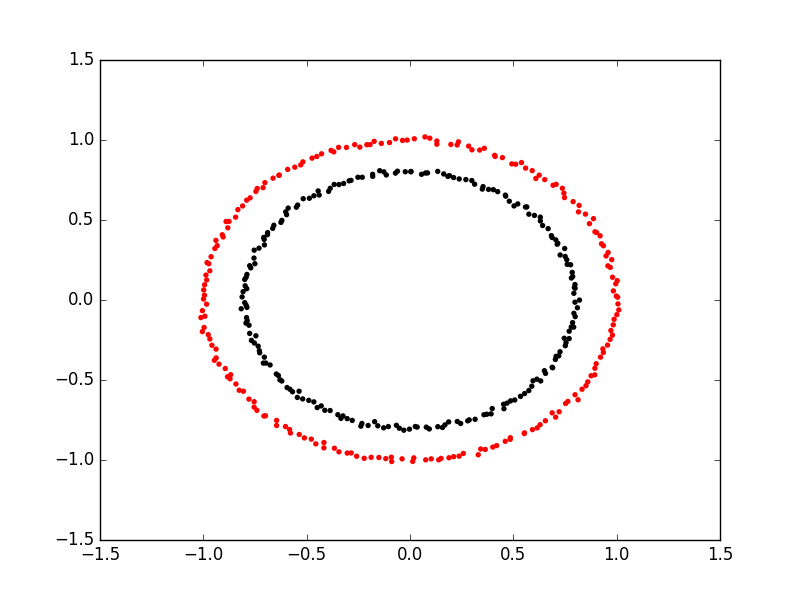

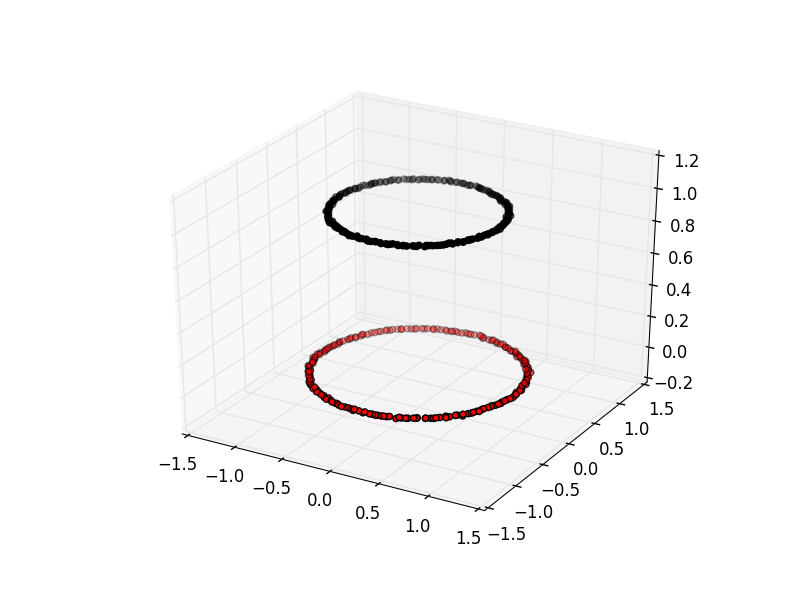

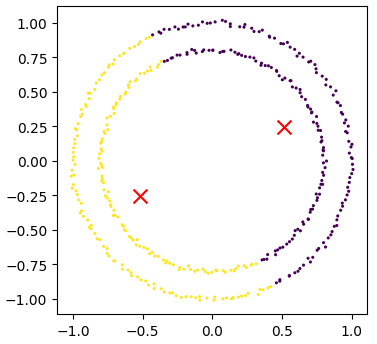

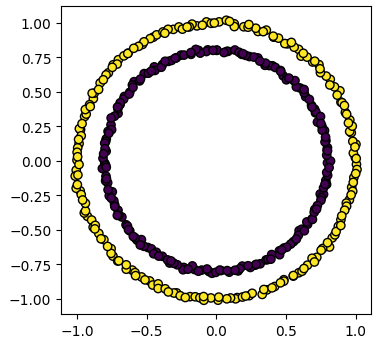

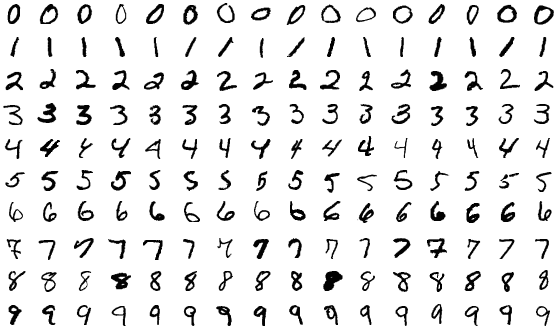

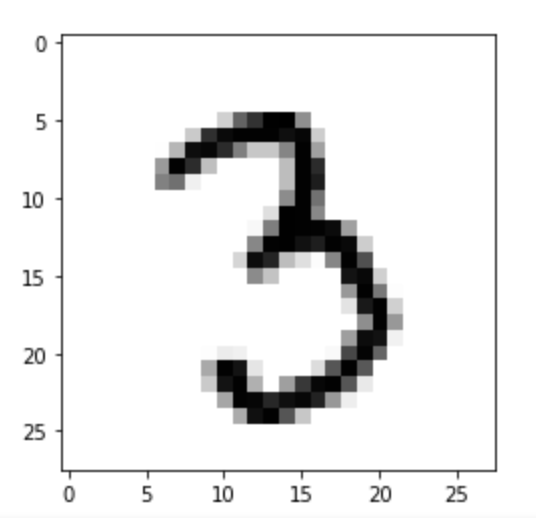

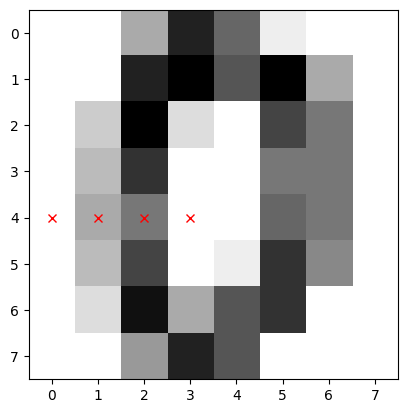

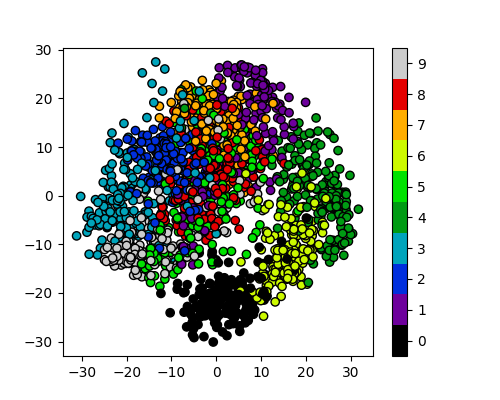

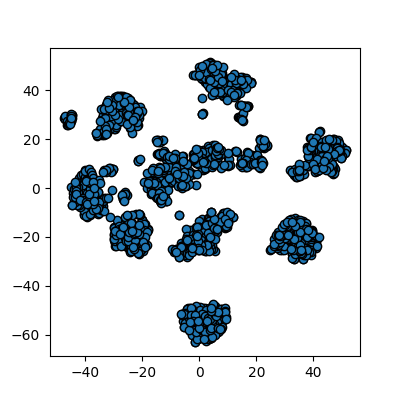

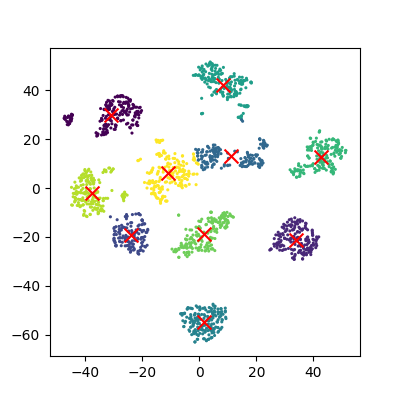

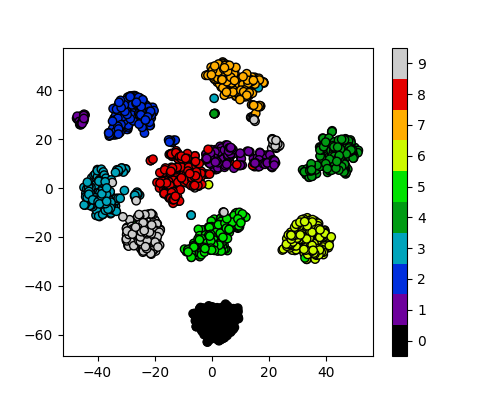

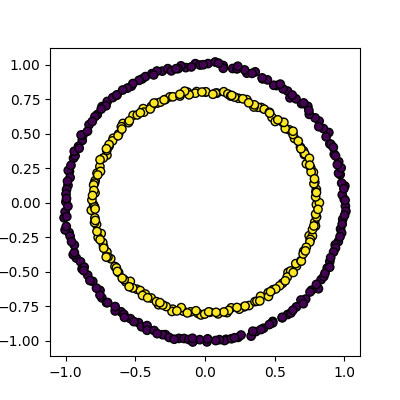

K-means with Scikit-Learn